Military applications of robotics and AI are on the verge of deployment on the battlefield. Dubbed lethal Autonomous Weapons Systems (AWS), these could be the future of warfare, and experts continue to warn against their potential for destruction and disruption on a global scale.

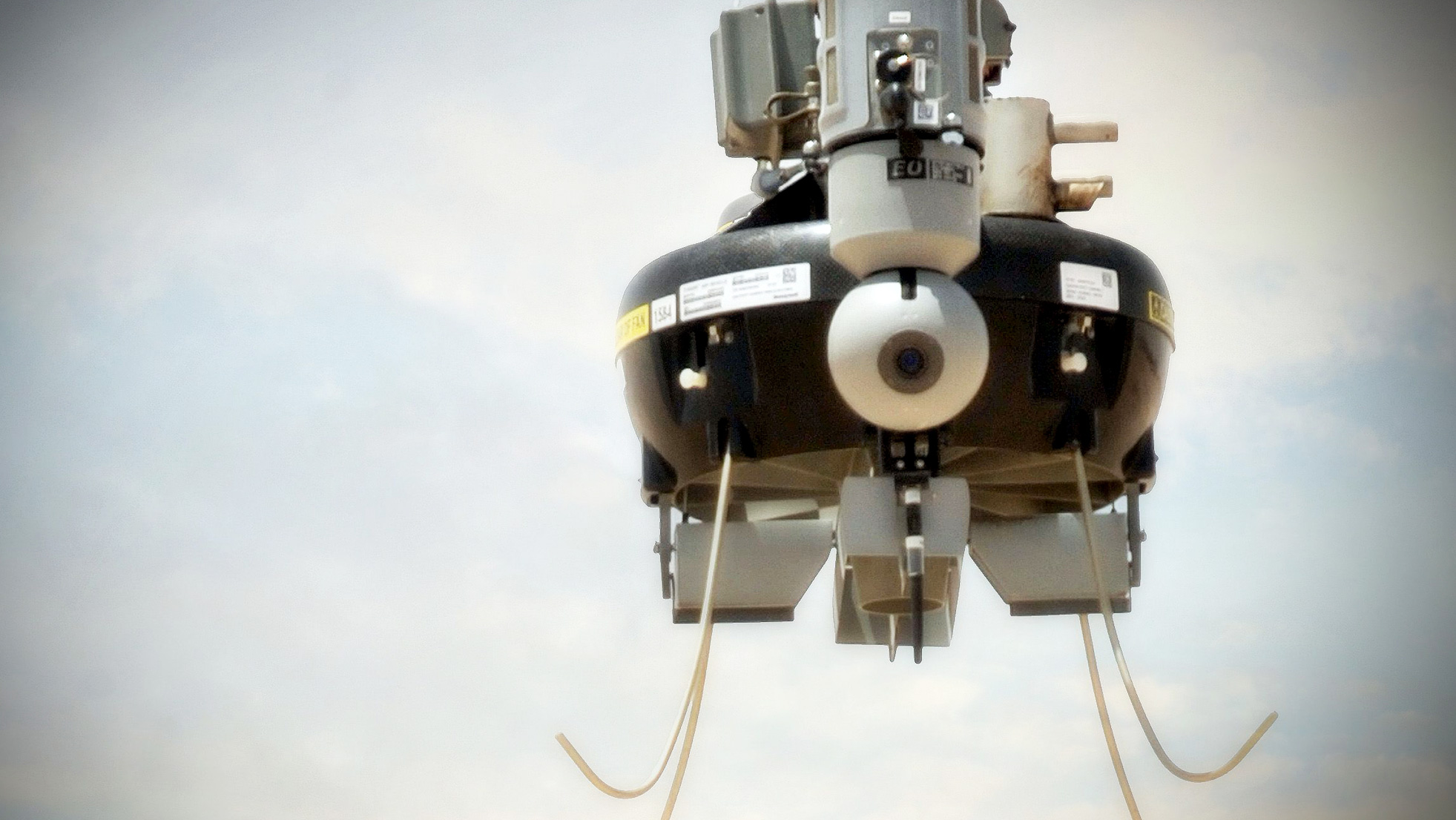

AWS can include anything from unmanned missile systems and drones to tomorrow’s intelligent robot warriors: machine learning is a versatile technology that has spurred the imagination of defense strategists and arms manufacturers.

An independent database lists 284 autonomous weapons systems already operational in many countries across the globe. Notably, most are developed by the top five arms exporters: Russia, China, the United States, France and Germany. Many of these are capable of moving and of identifying and targeting objects with little to no human input.

In the future, the defense industry hopes to use AI to confer more complex abilities such as planning, communicating and even establishing mission objectives. This goal is particularly troubling: AI is unlike the human mind and has different priorities because of the way it is programmed and the sources it learns from. This could lead to disproportionate attacks, logical loops and unprovoked military actions, even in the absence of a technical failure.

The discussion on the ethics of AWS is getting louder as this reality sets in. While most countries agree that AWS require some degree of “meaningful human control” or a similar safeguard, there is as yet no single universal definition of AWS. Furthermore, AWS can come in many degrees of autonomy, and the permissibility of each is also a major topic of the ongoing discussion.

Aware that the implications of AWS are not only technological but also political and ethical, many notable voices are increasingly calling for international cooperation aimed at implementing limits on autonomy or an outright ban on AWS before deployment in real conflicts begins.

In an open letter to the UN released on August 21, 2017, Elon Musk and 115 other signatories expressed their concerns about the future of mankind, demanding stringent regulation of AWS. The release of the letter was prompted by the cancellation of a long-awaited first meeting of a UN-appointed Group of Governmental Experts on AWS.

“Lethal autonomous weapons threaten to become the third revolution in warfare. Once developed, they will permit armed conflict to be fought at a scale greater than ever, and at timescales faster than humans can comprehend. These can be weapons of terror, weapons that despots and terrorists use against innocent populations, and weapons hacked to behave in undesirable ways. We do not have long to act. Once this Pandora’s box is opened, it will be hard to close,” warns the open letter.

This is the latest in a series of calls for preventive measures against an arms race in unmanned weaponry.

However much we might prefer to envision a zero-bloodshed future in which robots destroy each other during military conflicts, a more likely scenario is that of swarms of lethal machines at the disposal of individual or political interests. With no human cost to the attacking party and no limit on mass production beyond money, their potential for disrupting the defense-oriented international stability of the post-WWII era is unprecedented.

The famous Santayana quote, “Those who cannot remember the past are condemned to repeat it,” underlies the entire discussion on AWS regulation. The world is reflecting on the future of AI-controlled weapons in the shadow of past catastrophes such as Hiroshima and Nagasaki, the culmination of the last great arms race before the Cold War.

A nuclear strike is no longer seen as an easy one-hit win; since 1945, atomic bombs have served only as deterrents to conflict, because large-scale nuclear retaliation could obliterate life on Earth. For this reason, keeping humans in the loop at every step during wartime is not only sensible but necessary.

After all, an AI programmed to win would have no qualms about pressing that big red button.