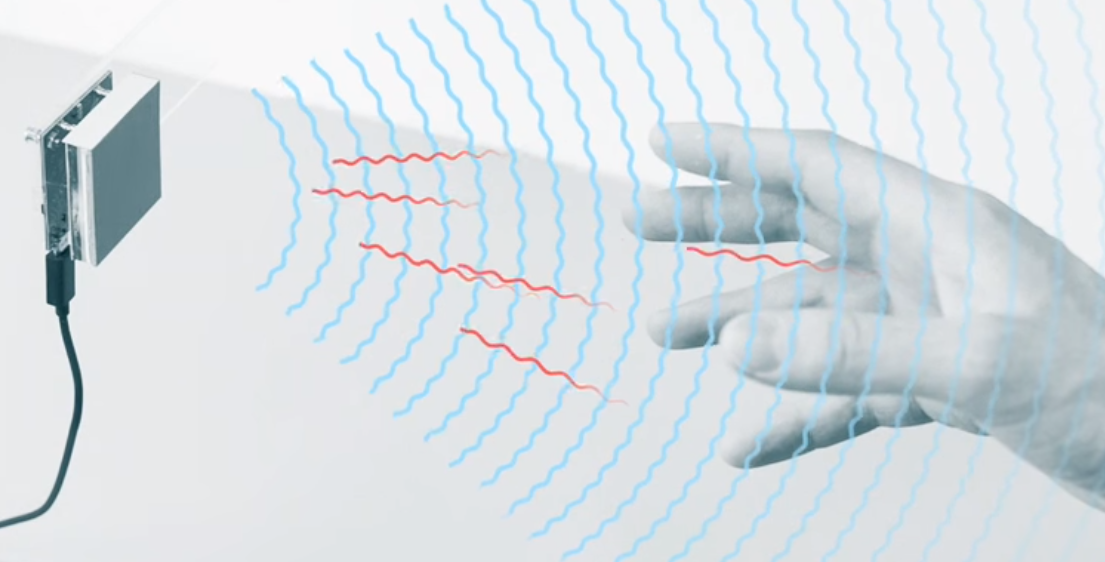

Google is developing a radar-based technology that lets users control devices with finger gestures in mid-air, without ever touching a screen.

Project Soli is Google’s latest attempt to solve the problem of fat, fumbling fingers on tiny touch screens, a source of frustration for anyone prone to swiping mistakes.

“We want to break the tension between the ever-shrinking screen sizes used in wearables, as well as other digital devices, and our ability to interact with them,” the company’s website boasts.

In the demonstration videos, the microchip-based technology appears to be controlled by twiddling your fingers as if you were pulling invisible strings in mid-air, or perhaps playing the world’s smallest imaginary violin.

One can imagine the implications, especially for platforms like Tinder, where instead of swiping left or right, one can twiddle clockwise or counter-clockwise.

According to the Google announcement, “The Soli sensor can track sub-millimeter motions at high speed and accuracy. It fits onto a chip, can be produced at scale, and can be used inside even small wearable devices.”

Apart from solving the problem of greasy fingerprints smeared across shiny surfaces, the implications for future touchless-technology patents are vast.

This could mean the elimination or alteration of keys, remote controls, telephones, radios, etc., as well as potential military applications (e.g. trigger-less guns). Remote detonations with the wave of a hand? The sky is the limit.

“Capturing the possibilities of the human hand was one of my passions,” said Ivan Poupyrev from Google’s Advanced Technology and Projects (ATAP) group.

Project Soli’s Lead Research Engineer, Jaime Lien, added, “The reason why we’re able to interpret so much from this one radar signal is because of the full gesture recognition pipeline that we’ve built. The various stages of this pipeline are designed to extract specific gesture information from this one radar signal that we receive at a high frame rate.”

Google’s developers hope to capture human intent by recognizing the same motions your fingers would make on a physical object, only with no object actually present between them.