Neuroscientists discuss technologies being developed to decode your brain in order to access thoughts and feelings that could be used against you in a court of law.

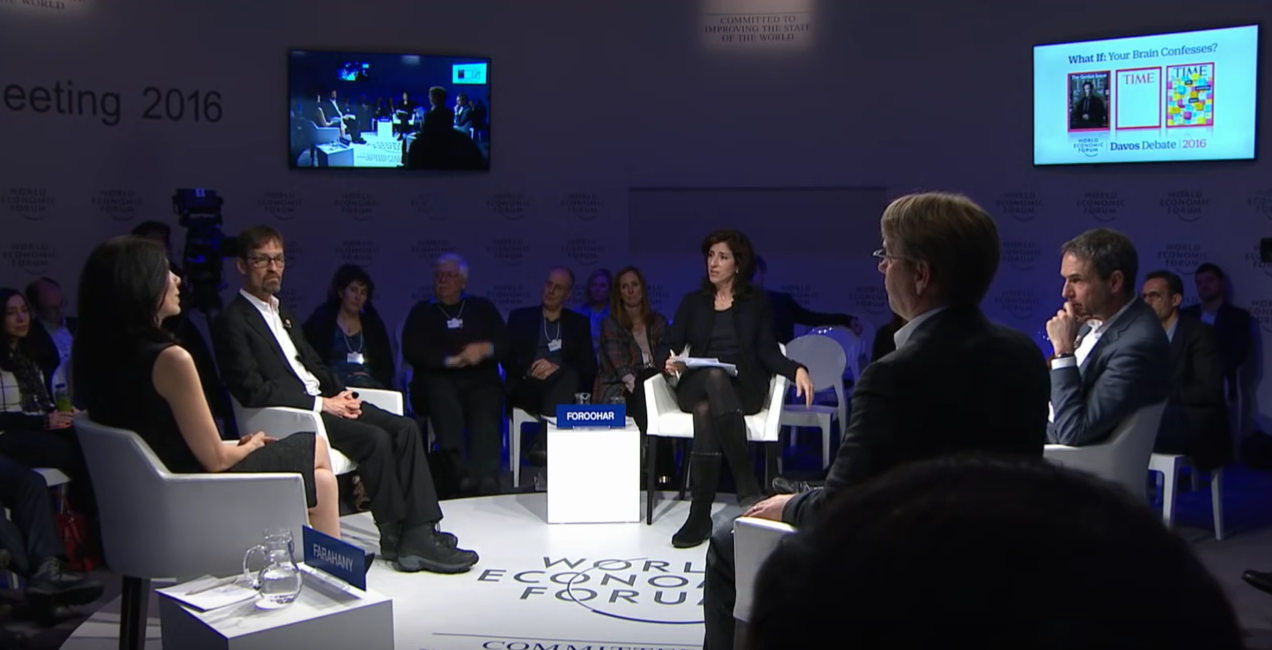

During a panel at the World Economic Forum in Davos, Switzerland, dubbed “What if: Your Brain Confesses?”, neuroscientists mulled over the implications of mapping the brain in a legal context, reminiscent of the film “Minority Report,” in which the government could put people on trial for crimes they had yet to commit.

The issues raised by decoding the brain for legal purposes demand serious ethical discussion with regard to individual privacy, self-incrimination, and the suggestibility of memory.

Should governments protect freedom of thought?

“If we can get to the point where either you can have an unwilling suspect or an unwilling individual having their brain decoded in some sense, legal systems don’t bake-in any presumptions that we can do that, and so there is no legal protections that could be afforded to you,” said panelist Nita Farahany, professor of law and philosophy at Duke University.

Dr. Farahany went on to say that while freedom of speech is protected, there is not yet any legal precedent covering freedom of thought, as if this discussion really needs to be had!

“So, I think we have to think about whether or not the brain is some special place of privacy. Is there some freedom of thoughts and not just freedom of speech that we need to be actively protecting?” Farahany went on to question.

“People have shown that they’re quite willing to give up privacy for convenience”

What is alarming is the panelists’ total lack of awareness of basic human rights issues. Of course freedom of thought goes hand-in-hand with freedom of speech. How can the freedom of speech, or even the ability to speak, exist without a brain to operate it?

“Anything that is in current, conscious awareness can potentially be decoded, it’s just a matter of technologies,” said UC Berkeley professor and panelist Dr. Jack Gallant.

Dr. Gallant described technologies in which scientists were able to map linguistic patterns in the brain, such as syntax, phonetics, and semantics, that would then be used to decode “internal speech.”

Mapping internal speech for linguistic forensics

Linguistic forensics is already a staple of the legal system: prosecutors can call on specialists to identify patterns in speech and written language to determine whether someone is guilty of perjury or is in fact the perpetrator of a crime.

For example, a man who continued to send emails and texts posing as his wife was later convicted of murdering her, based on analysis of the disguised messages he sent.

Now, researchers are looking to extend that same kind of linguistic forensics to internal speech within the brain that has never been uttered aloud.

The ideal for prosecutors would be to analyze the brain activity behind a suspect’s internal speech in order to establish guilt.

The trouble with memory

However, there is at least one GIGANTIC problem with this line of thinking, and that has to do with how memory actually works, and more specifically, how memories are not always “true.”

It has been shown over and over again that memory is not like a VCR, in which events are recorded and then played back on demand. On the contrary, people’s memories of events are often flawed, and they are very open to outside suggestion. Under a number of circumstances, a person can be made to believe they saw something they did not actually see.

Implanting memories is nothing new either, nor is the difficulty of determining guilt or innocence in a suspect who is a pathological liar or has some other cognitive or psychological malady.

What the global elite in Davos were discussing, however, was how gullible the population is when it comes to giving up personal freedoms, and their expectation that this brain decoding technology should be available within the next 10 years.

“Personally, I think it’s just a matter of time before there will be a portable brain decoding technology that decodes language just as fast as you can type with your thumbs on a cell phone,” said Dr. Gallant.

“And everyone will wear them because people have shown that they’re quite willing to give up privacy for convenience, and then I think that brings up a lot of really interesting and scary ethical and privacy issues.”

“Scary ethical and privacy issues”

Can you imagine being prosecuted for a fictitious crime that exists only in your imagination, but could still be “recorded” by brain decoders? Given that prosecutors and law enforcement officers often plant self-incriminating ideas in a suspect’s head, wrongful convictions are sure to follow, and who knows how nefariously the law will work to come up with a guilty verdict?

How many movies have you seen where the cop has the “perp” in a small room, sweating it out under the lights while the officer shouts, “Confess!”

Dr. Farahany even went so far as to say that they are considering tracking “pain detection” in the brain as a means to coerce confessions from suspects.

“One of the emerging areas that is really interesting among neuroscience is ‘pain detection,’ and once we understand the circuitries that cause pain, I guess the question is, ‘could we then instill pain and use that in any coercive measures within the legal system?'”

Did she actually suggest inflicting pain to gain a confession? Torture? See for yourself below.

Scary ethical and privacy issues, indeed!