A new stock image search engine, Everypixel, is beta testing a unique algorithm that uses neural networks to measure the aesthetics of stock images.

Everypixel’s team trained a neural network to see the beauty in photos the same way humans do. While the company built the algorithm specifically to separate the most aesthetically pleasing stock images from the ugly ones, it also works with almost any type of image.

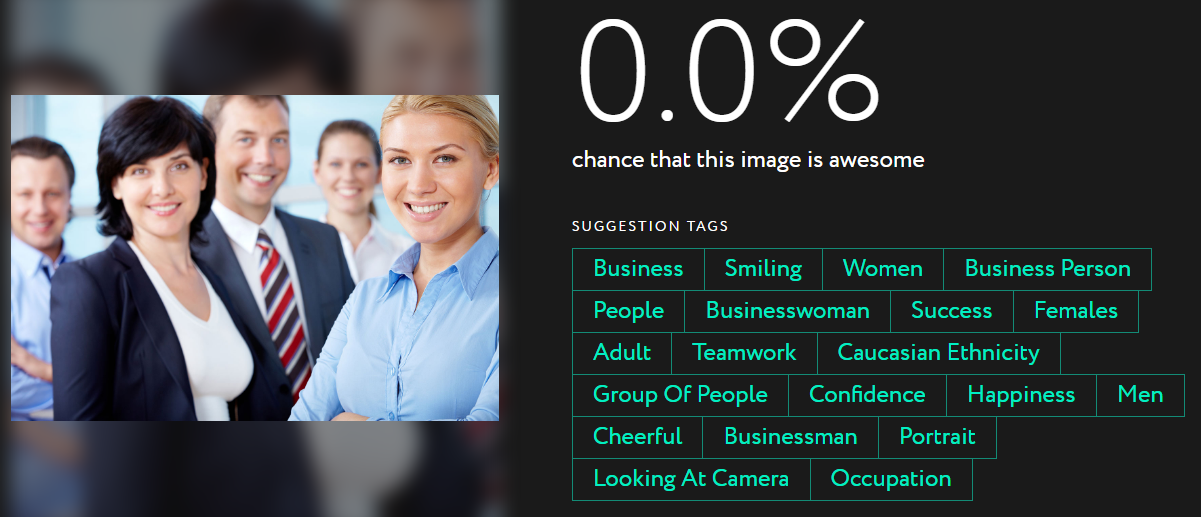

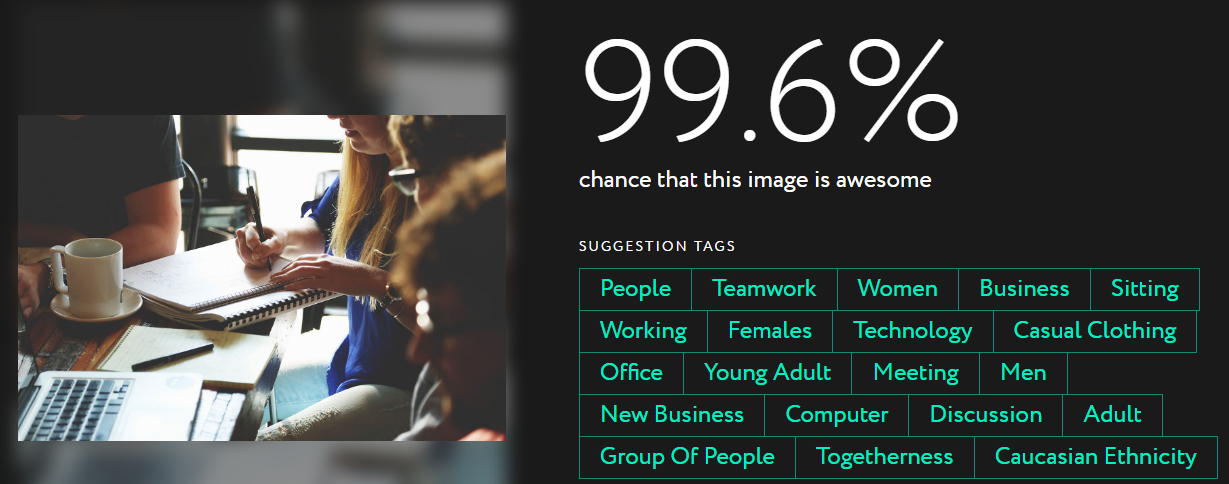

Once logged in, you can either upload an image or paste its URL into Everypixel’s Aesthetics platform, and it returns a percentage indicating how likely the photo is to be “awesome.” (Editor’s note: The platform went live on March 15.)

After playing around with the software, it appears to this writer that your everyday, run-of-the-mill selfie gets a low score, while images of landscapes, diverse groups of people, and some works of art receive higher scores.

A surprisingly cool and accurate feature of the platform is its suggested tags. It analyzes each image and suggests tags that accurately describe it, without erroneous labels.

Here is an example from Everypixel’s website. As you can see, it is very generic and business-oriented, and it gets a score of zero. I cringe to think how many stock photos of similar quality I’ve used on The Sociable, but hey, that’s what happens when you have to deal with licensing issues.

Compare the image above with another stock photo from Everypixel’s collection and you get a glimpse of some of the factors the algorithm takes into account, especially in the suggested tags it generates. The algorithm seems to favor diversity, ethnicity, and togetherness.

By now, you may be wondering how it actually works, rather than reading my speculation based on a few primitive tests. I’ll let the experts speak for themselves.

To develop its algorithm, the team at Everypixel asked designers, editors and experienced stock photographers to help generate a training dataset.

They tested 956,794 positive and negative patterns, and their “‘Heartless algorithm’ learned to see the beauty of shots in the same way as you do.”
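Everypixel has not published its model, so the following is only a toy illustration of the general idea of learning from positive and negative examples. It swaps in plain logistic regression (not a neural network) trained by gradient descent, and the two features per image (`sharpness`, `composition`) and all data values are hypothetical. The model’s output probability is scaled to a percentage, similar in spirit to the score the platform reports.

```python
import math


def sigmoid(z: float) -> float:
    """Map a raw score (logit) to a probability in (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def train_logistic(samples, labels, lr=0.5, epochs=500):
    """Fit weights w and bias b by batch gradient descent on log-loss."""
    n_features = len(samples[0])
    w = [0.0] * n_features
    b = 0.0
    n = len(samples)
    for _ in range(epochs):
        grad_w = [0.0] * n_features
        grad_b = 0.0
        for x, y in zip(samples, labels):
            p = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)
            err = p - y  # derivative of log-loss with respect to the logit
            for j in range(n_features):
                grad_w[j] += err * x[j]
            grad_b += err
        w = [wi - lr * gw / n for wi, gw in zip(w, grad_w)]
        b -= lr * grad_b / n
    return w, b


def awesomeness_percent(x, w, b) -> float:
    """Hypothetical 'awesome' score: predicted probability as a percentage."""
    return 100.0 * sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


# Hypothetical toy features per image: (sharpness, composition), each in [0, 1]
samples = [(0.9, 0.8), (0.8, 0.9), (0.7, 0.7), (0.2, 0.1), (0.1, 0.3), (0.3, 0.2)]
labels = [1, 1, 1, 0, 0, 0]  # 1 = a human rater marked the image "awesome"

w, b = train_logistic(samples, labels)
print(round(awesomeness_percent((0.85, 0.85), w, b), 1))
print(round(awesomeness_percent((0.15, 0.15), w, b), 1))
```

A real system would learn from raw pixels with a deep network rather than two hand-picked features, but the training signal (human-labeled positive and negative examples) plays the same role.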

The neural network estimates the visual quality of every image and assigns an aesthetic score to each file. This score then becomes one of the factors in the overall ranking mix, helping improve search results by bringing more aesthetically pleasing images to the first pages.
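Everypixel doesn’t say how the aesthetic score is weighted against its other ranking factors. As a rough sketch, assuming a simple linear blend of a hypothetical text-relevance score and the aesthetic score (both in [0, 1]), the reordering effect might look like this. The field names, weight, and file names are all made up for illustration.

```python
from dataclasses import dataclass


@dataclass
class Result:
    image_id: str
    relevance: float   # hypothetical text-match score in [0, 1]
    aesthetics: float  # hypothetical aesthetic score in [0, 1]


def rank(results, aesthetic_weight=0.3):
    """Sort results by a weighted mix of relevance and aesthetic score."""
    def combined(r: Result) -> float:
        return (1 - aesthetic_weight) * r.relevance + aesthetic_weight * r.aesthetics

    return sorted(results, key=combined, reverse=True)


results = [
    Result("handshake.jpg", relevance=0.90, aesthetics=0.05),
    Result("team-outdoors.jpg", relevance=0.85, aesthetics=0.95),
    Result("office-desk.jpg", relevance=0.60, aesthetics=0.70),
]

for r in rank(results):
    print(r.image_id)
```

With the default weight, the slightly less relevant but far more attractive `team-outdoors.jpg` moves ahead of the generic `handshake.jpg`; setting `aesthetic_weight=0.0` falls back to ranking by relevance alone.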

In a nutshell, there is solid science and methodology behind the algorithm, and it accurately does what it was designed to do: measure the “awesomeness” of stock images.

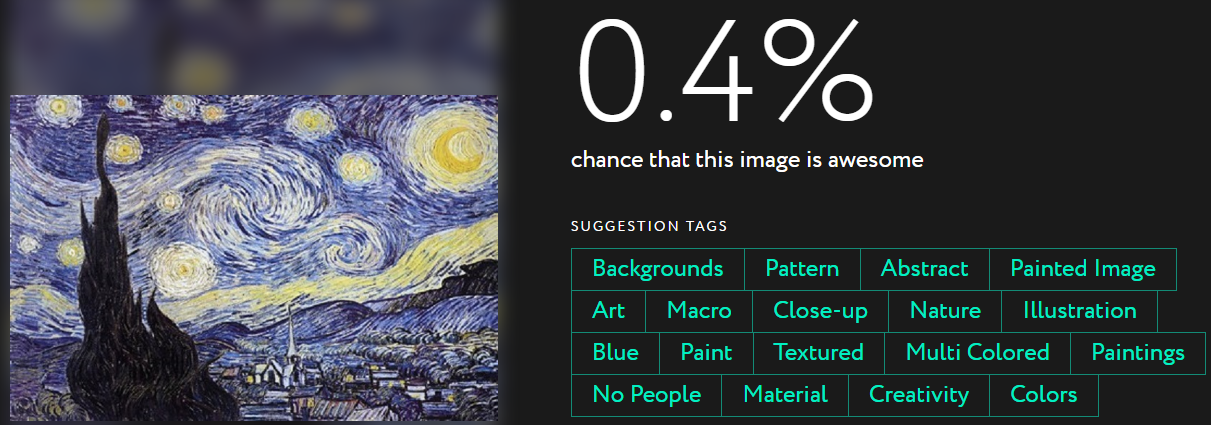

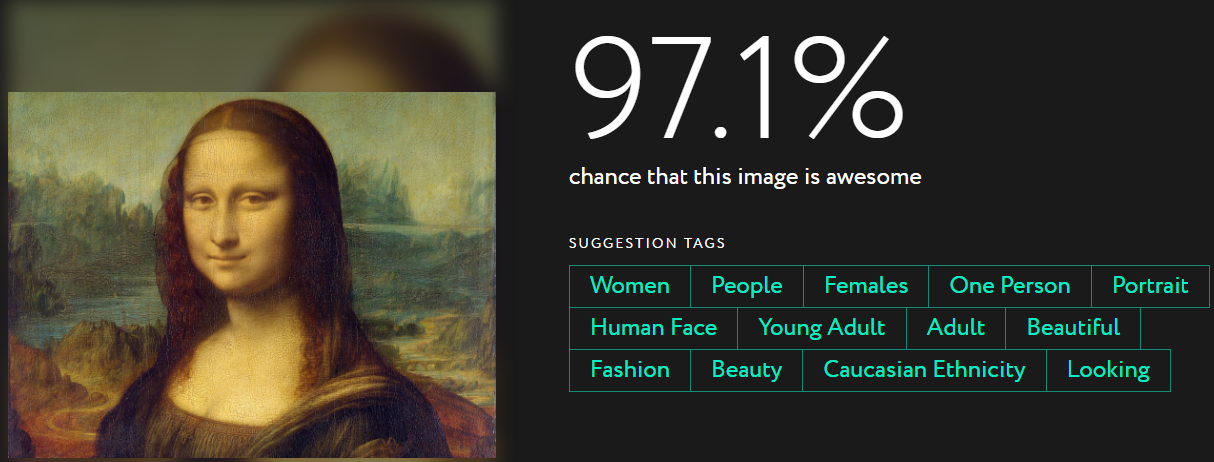

Out of curiosity, I wanted to test it on well-known works of art.

Here’s a quick comparison of the “awesomeness” of Van Gogh’s Starry Night and Leonardo da Vinci’s Mona Lisa, bearing in mind that the algorithm was designed specifically to analyze stock photos, not well-known masterpieces.

The platform may be biased when it comes to works of art, but for analyzing stock images, it’s pretty spot-on.

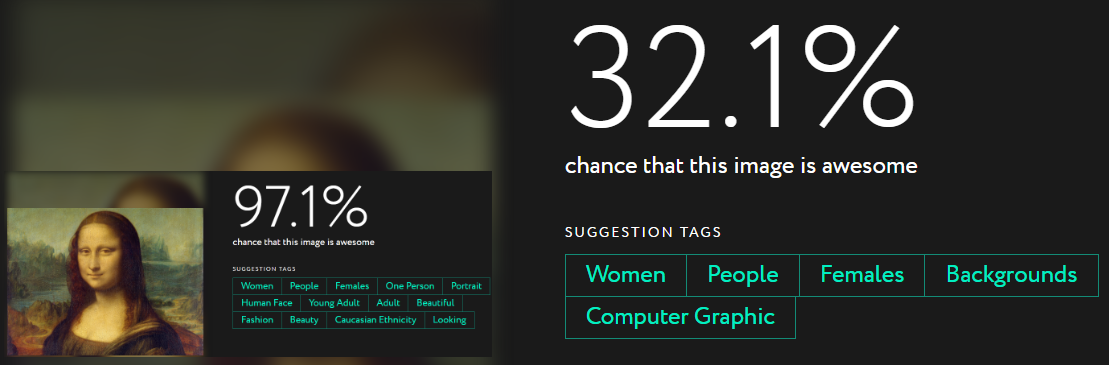

Now, let’s venture into the Twilight Zone. What if we analyze the awesomeness of the image that was just analyzed for awesomeness? In other words, what does the Mona Lisa result above score when it is fed back into the same tool, like a Russian doll?

Bingo! The awesomeness score drops each time the image is recycled, which is exactly what it should do. Kudos!

Editor’s Note: This article was updated on March 16 to reflect that the beta version went live on March 15.