A US Department of Homeland Security official testifies that facial recognition technology is very accurate and that the agency is not seeing false positives that misidentify people at US ports of entry, though it does have trouble matching some photos to its databases.

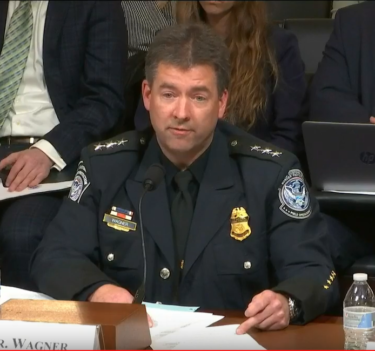

“We are not seeing false positives that’s matching you to a different identity” — Department of Homeland Security Officer John Wagner

Today the House Committee on Homeland Security revisited the issue of facial recognition at US ports of entry with a hearing called “About Face: Examining the DHS’ Use of Facial Recognition and Other Biometric Technologies, Part II.”

John Wagner, Deputy Executive Assistant Commissioner, Office of Field Operations, US Customs and Border Protection (CBP), US Department of Homeland Security (DHS), testified today that facial recognition technology used at air, land, and sea ports of entry did not misidentify people.

John Wagner

“We are not seeing false positives that’s matching you to a different identity. We’re not seeing that with this technology. More likely you don’t match against anything. So, we’re seeing a ‘no information’ return,” he told the Committee.

That’s not to say that the process isn’t flawed. One of the biggest problems the DHS and CBP face is failing to find any match for the face being scanned.

These failed matches can be attributed more to camera quality, lighting, and the age of the traveler’s passport photo than to anything in the algorithms.

Technical issues with the cameras, or a person moving while the picture is being taken, can produce “no information” returns.

“No information” returns have been shown to particularly affect African-Americans, Asian-Americans, children, and the elderly, according to the witnesses present at Thursday’s hearing.

“It’s not that they’re misidentified; it’s just that we didn’t match them to a picture in the gallery that we did have of them,” said Wagner.

“That’s where we look at the operational variables — the camera, the picture quality, the human behaviors when the photo was taken, the lighting — and then the age of the photo, and then what we’ve seen [is that] your gallery size impacts your match rate.”

To understand what Wagner is talking about, let’s take a look at how the technology and the process work.

The only photos used for facial recognition are the ones CBP takes when travelers are told to look into the camera. The system then tries to match that new photo to a picture that already exists in the database, which could be the same photo that’s in your passport.

If the picture taken of you at the airport is blurry or badly lit, the system might not be able to match you to the other photos (from official documents) that the government has of you in the database.
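Wagner’s remark that gallery size affects the match rate can be illustrated with some simple arithmetic. The Python sketch below is not CBP’s method. It assumes, purely for illustration, that every comparison against a gallery photo carries the same small, independent chance of a false match; the function name and the rate of one in a million are hypothetical.

```python
def chance_of_any_false_match(false_match_rate, gallery_size):
    """Probability that at least one of gallery_size comparisons is a false match.

    Assumes each comparison is independent and has the same false match rate,
    which is a simplification made only for this illustration.
    """
    return 1 - (1 - false_match_rate) ** gallery_size


# Hypothetical per-comparison false match rate of one in a million.
HYPOTHETICAL_RATE = 1e-6

for size in (300, 30_000, 3_000_000):
    probability = chance_of_any_false_match(HYPOTHETICAL_RATE, size)
    print(f"gallery of {size:>9,} photos: {probability:.4%}")
```

Under these assumptions the chance of a spurious hit grows as the gallery grows, which is one reason a small gallery, such as the passengers expected on a single flight, is easier to search accurately than a very large one.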

How DHS, CBP Handle Data Security and Privacy Concerns

On the question of data security, Wagner explained the chain of events that occurs when facial recognition technology is used at US ports of entry.

“The photographs that are taken by one of our stakeholders’ cameras are encrypted, they’re transmitted securely to the CBP cloud infrastructure where the [photo] gallery is positioned.

“The pictures are templatized, which means they’re turned into some type of mathematical structure. They cannot be reverse engineered.

“And they’re matched up with the templatized photos that we’ve prestaged in the gallery. And then just a response goes back with ‘Yes’ or ‘No’ with the unique identifier,” he said.
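The flow Wagner describes (a new photo is turned into a template, compared with templates prestaged in a gallery, and answered with a “Yes” or “No” plus an identifier) can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the testimony does not say how CBP’s templates are built or compared, so the short lists of numbers, the cosine similarity measure, the 0.9 threshold, and every name below are hypothetical stand-ins.

```python
from dataclasses import dataclass
from math import sqrt
from typing import Optional

# Hypothetical decision threshold; real systems tune this value empirically.
MATCH_THRESHOLD = 0.9


@dataclass
class MatchResponse:
    matched: bool               # the "Yes" or "No"
    identifier: Optional[str]   # unique identifier on a match, otherwise None


def cosine_similarity(a, b):
    """Similarity of two templates, treated here as plain lists of numbers."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = sqrt(sum(x * x for x in a))
    norm_b = sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def match_against_gallery(probe, gallery):
    """Compare a probe template with every prestaged gallery template."""
    best_id, best_score = None, -1.0
    for identifier, template in gallery.items():
        score = cosine_similarity(probe, template)
        if score > best_score:
            best_id, best_score = identifier, score
    if best_score >= MATCH_THRESHOLD:
        return MatchResponse(True, best_id)
    # Nothing cleared the threshold: a "no information" return.
    return MatchResponse(False, None)


# Made-up templates standing in for a prestaged gallery.
gallery = {
    "traveler-001": [0.9, 0.1, 0.4],
    "traveler-002": [0.1, 0.8, 0.6],
}
clear_photo = [0.88, 0.12, 0.41]   # close to traveler-001's template
blurry_photo = [0.5, 0.5, 0.5]     # resembles nobody strongly

print(match_against_gallery(clear_photo, gallery))
print(match_against_gallery(blurry_photo, gallery))
```

In this toy version, the blurry photo does not get matched to the wrong traveler. It simply fails to clear the threshold for anyone, which mirrors the “no information” return Wagner describes.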

When it comes to privacy, Wagner submitted the following in his written testimony:

- Biometric entry-exit is not a surveillance program. CBP does not use hidden cameras.

- CBP uses only photos collected from cameras deployed specifically for this purpose and does not use photos obtained from closed-circuit television or other live or recorded video.

- CBP uses facial comparison technology to ensure a person is who they say they are – the bearer of the passport they present.

- Travelers are aware their photos are being taken and that they can opt out.

- Facial comparison technology can match more than 97 percent of travelers through the creation of facial galleries.

- To ensure higher accuracy rates, as well as efficient traveler processing, CBP compares traveler photos to a very small gallery of high-quality images that those travelers already provided to the US Government to obtain a passport or visa.

- For US citizens, the photo they take at the point of entry is deleted within 12 hours.

- For foreign nationals, the photo that they take at the point of entry is deleted after two weeks.

According to Wagner, “When airlines or airports partner with CBP on biometric air exit, the public is informed that the partner is collecting the biometric data in coordination with CBP.”

“We notify travelers at these ports using verbal announcements, signs, and/or message boards that CBP takes photos for identity verification purposes, and they are informed of their ability to opt-out.

“Foreign nationals may opt out of providing biometric data to a third party, and any US citizen or foreign national may do so at the time of boarding by notifying the airline-boarding agent that they would like to opt out.”

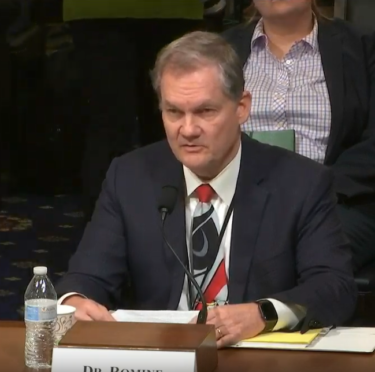

Chuck Romine

To get another perspective on how facial recognition technology works, Chuck Romine, Ph.D., Director of the Information Technology Laboratory, National Institute of Standards and Technology, US Department of Commerce, testified that:

- Face detection technology determines whether the image contains a face.

- Face analysis technology aims to identify attributes such as gender, age, or emotion from detected faces.

- Face recognition technology compares an individual’s facial features to available images for verification or identification purposes.

“Verification or ‘one-to-one’ matching confirms a photo matches a different photo of the same person in a database or the photo on a credential, and is commonly used for authentication purposes, such as unlocking a smartphone or checking a passport,” stated Romine in his written testimony.

“Identification or ‘one-to-many’ search determines whether the person in the photo has any match in a database and can be used for identification of a person.”
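The difference between the two modes Romine describes comes down to what the new photo is compared against. The Python sketch below is a simplified illustration, not NIST’s or any vendor’s implementation; it assumes faces have already been reduced to short lists of numbers, and the similarity measure, the threshold, and the names are all hypothetical.

```python
from math import sqrt

THRESHOLD = 0.9  # hypothetical decision threshold


def similarity(a, b):
    """Cosine similarity between two feature vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (sqrt(sum(x * x for x in a)) * sqrt(sum(y * y for y in b)))


def verify(probe, reference):
    """One-to-one: does the probe match the single reference it is claimed to be?"""
    return similarity(probe, reference) >= THRESHOLD


def identify(probe, database):
    """One-to-many: list every database entry the probe matches."""
    return [name for name, reference in database.items()
            if similarity(probe, reference) >= THRESHOLD]


# Made-up feature vectors for two enrolled people and one new photo.
database = {"alice": [1.0, 0.0, 0.2], "bob": [0.0, 1.0, 0.3]}
probe = [0.95, 0.05, 0.25]

print(verify(probe, database["alice"]))  # checks one claimed identity
print(identify(probe, database))         # searches everyone enrolled
```

Verification answers a yes-or-no question about one claimed identity, while identification has to search the whole database, so its result depends on who else is enrolled.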

Keeping Civil Rights and Ethics in Mind

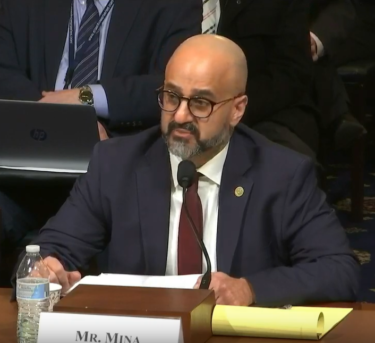

Peter Mina, Deputy Officer for Programs and Compliance, Office for Civil Rights and Civil Liberties, US Department of Homeland Security, outlined in his testimony that the DHS is working with other organizations to ensure that people’s civil rights aren’t violated.

Peter Mina

According to Mina, “DHS partnered with the National Institute of Standards and Technology (NIST) on the assessment of facial recognition technologies to improve data quality and integrity, and ultimately the accuracy of the technology, as a means of eliminating such impermissible bias.”

Below are some of the steps that have been taken to protect travelers from faulty facial recognition systems.

- The Office for Civil Rights and Civil Liberties (CRCL) has been and continues to be engaged with the DHS operational Components to ensure use of facial recognition technology is consistent with civil rights and civil liberties law and policy.

- Operators, researchers, and civil rights policymakers must work together to prevent algorithms from leading to racial, gender, or other impermissible biases in the use of facial recognition technology.

- Facial recognition technology can serve as an important tool to increase the efficiency and effectiveness of the Department’s public protection mission, as well as the facilitation of lawful travel, but it is vital that these programs utilize this technology in a way that safeguards our Constitutional rights and values.

- Currently, the DHS Office of Biometric Identity Management (OBIM) is partnering with NIST to develop a face image quality standard that will improve the accuracy and reliability of facial recognition as it is employed at DHS.

- CBP is partnering with NIST to analyze performance impacts due to image quality and traveler demographics and to provide recommendations regarding match algorithms, optimal thresholds for false positives, and the selection of photographs used for comparison.
