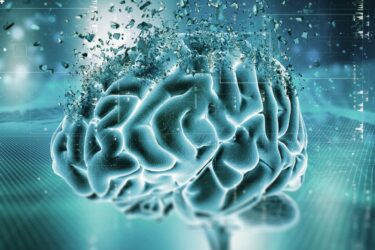

We have seen the hype, and we know that AI could do amazing things. In the future it could control many aspects of our lives, revolutionize industries and maybe even cure cancer, yet we know very little about how it actually works.

For example, when Google’s subsidiary DeepMind decided to pit AI against itself in a “social dilemma”, the internet was fascinated by the results, but even the greatest AI experts were unsure how to explain them. In the game – known as the “prisoner’s dilemma” – both AI players had the option to betray the other to win, but if both opted for betrayal, they both lost.

The experiment found that “AI agents altered their behaviour, becoming more cooperative or antagonistic, depending on the context”. When they had a common enemy they decided to co-operate, but when resources were scarce, betrayal was often the best option.
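To make the structure of the dilemma concrete, here is a minimal sketch of a prisoner's dilemma payoff table in Python. The scores (5, 3, 1, 0) are conventional textbook values chosen for illustration, and the names `PAYOFFS` and `best_response` are hypothetical; none of this reflects the actual environments or rewards DeepMind used.

```python
# Illustrative payoffs only: these numbers are assumptions, not DeepMind's setup.
COOPERATE, BETRAY = "cooperate", "betray"

# PAYOFFS[(my_move, other_move)] -> (my_score, other_score)
PAYOFFS = {
    (COOPERATE, COOPERATE): (3, 3),  # both cooperate: a decent result for each
    (COOPERATE, BETRAY): (0, 5),     # I am betrayed: I get nothing, the other wins
    (BETRAY, COOPERATE): (5, 0),     # I betray: I win, the other gets nothing
    (BETRAY, BETRAY): (1, 1),        # both betray: both end up worse off
}


def best_response(other_move):
    """Return the move that maximizes my own score against a fixed opponent move."""
    return max(
        (COOPERATE, BETRAY),
        key=lambda my_move: PAYOFFS[(my_move, other_move)][0],
    )


if __name__ == "__main__":
    for other in (COOPERATE, BETRAY):
        print(f"If the other player chooses {other}, my best move is {best_response(other)}")
```

With these assumed numbers, betrayal is the self-interested choice whatever the other player does, yet mutual betrayal leaves both players with less than mutual cooperation would have. That tension is what makes the game a dilemma.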

The issue is, as interesting as these results are, nobody is entirely sure why they came about. It seems to reflect a rational human self-interest, but the step-by-step decision making behind it is unclear and has provoked much online debate. We cannot understand the AI, and the AI cannot explain itself, so for now we cannot know exactly what the AI was thinking when it decided to sacrifice its fellow AI for a virtual prize.

Understanding AI Decision Making

The game analogy illustrates a huge obstacle for the development of AI. To develop truly ground-breaking AI, we need to understand its decision making. And to understand its decision making, AI needs to understand itself.

For this reason, the Ulsan National Institute of Science and Technology (UNIST) in South Korea has launched a program that uses the power of AI in order to understand it.

The program – “Next-generation AI Technology”, or “Next-generation Learning·Sequencing” – is part of the prestigious National Artificial Intelligence (AI) Strategic Project.

The project essentially seeks to use AI to understand how human decision making works, which in turn will mean we can understand the decision making of AI.

The aim, said Professor Choi, is to “develop AI systems that explain how they arrive at their decisions that are based on real-world data”.

The belief is that this development “will help apply AI in specialized fields, such as clinical diagnosis and financial transactions, that are in need of greater transparency”. For these reasons the researchers have secured KRW 15 billion (about USD 13 million) from the Korean government.

This technology is pivotal to those specific fields because, when deciding whether to take on a big stock trade or an incredibly risky cancer operation, we need an explanation as to why. Just as you would be unlikely to let a person gamble your money on the stock market or perform a risky medical procedure on you without a rational justification, you would be unlikely to trust a robot to do so without one.

It is believed that once the AI can understand decision making, it will be able to “diagnose many diseases by analysing a patient’s medical records, including brain imaging and biometric data. Indeed, this will enable the early diagnosis of pancreatic cancer, as well as the Alzheimer’s disease”.

In these cases, transparency – which an understanding of decision making would provide – is also a huge asset.

In a purely commercial arena, this could be used – perhaps worryingly – to help predict future prices in the stock or natural resources markets.