When we speculate about the potential threats surrounding AI, many of us may have flashbacks to The Terminator, trembling at the idea of a Skynet entity launching all of the world's most dangerous nuclear missiles.

While this fear is not unrealistic, our perception of AI often overlooks how dangerous the technology can be when it is integrated with the right weaponry and used by terrorists, tyrannical governments or, for that matter, any government at all.


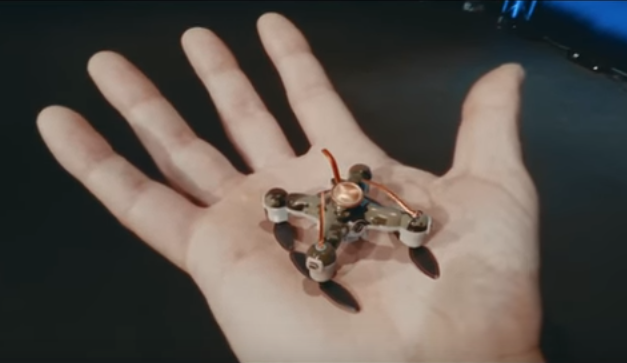

Slaughterbots are autonomous killer robots that can be released in large swarms and use facial recognition to locate and kill their targets. They presently exist only in fiction (as far as we know), even though the underlying technology already exists. The hypothetical video below depicts the very bleak future they could bring about.

Prominent figures in the tech community have been very vocal about the threat AI poses when it is equipped with the right technology. Back in August, Elon Musk expressed serious concern about AI, describing it as a bigger threat than North Korea. And he is not alone.


Tech entrepreneur Mike Lynch, described as Britain's answer to Bill Gates, claims that 2018 will be the year of "machine-on-machine attack," warning of a worrying future of weaponized AI. Lynch, who is worth around £500 million, believes that machines will completely dominate the digital battlefield and that humans won't be able to stop them.

These two tech leaders are part of a growing number of people who fear weaponized AI. The Future of Life Institute, an AI watchdog organization whose profile grew massively thanks to its campaign to stop killer robots, worked with UC Berkeley's Stuart Russell to produce the "Slaughterbots" video.

Stuart Russell

In an interview with thebulletin.org, Russell openly discussed his motivation for producing a video that could visually demonstrate the potential dangers of autonomous weapons.

“It started with a thought that I had. We were failing to communicate our perception of the risks [of autonomous weapons] both to the general public and the media and also to the people in power who make decisions—the military, State Department, diplomats, and so on,” states Russell.

“So just to give you one example of the level of misunderstanding, I went to a meeting with a very senior Defense Department official. He told us with a straight face that he had consulted with his experts, and there was no risk of autonomous weapons taking over the world, like Skynet [the runaway artificial intelligence from the Terminator movies]. If he really had no clue what we were talking about, then probably no one else did either, and so we thought a video would make it very clear,” he adds.

Worryingly, the technology appears to be on the verge of being built, and 2018 could indeed be the turning point. According to Russell, "it would take a good team of PhD students and post docs to put together all the bits of the software and make it work in a practical way."

However, there are ways to keep this threat under control, at least to some extent.

“We have a chemical weapons treaty. Chemical weapons are extremely low-tech. You can go on the web and find the recipe for pretty much every chemical weapon ever made, and it’s not complicated to make them—but the fact that we have the Chemical Weapons Convention means that nobody is mass-producing chemical weapons. And if a country is making small amounts and using them, like Syria did, the international community comes down on them extremely hard,” claims Russell.