There is no single right big data solution. Organizations are most likely to succeed with big data if they identify their own specific goals and requirements, and choose the mix of hardware and software that will best cater to their specific digital architecture.

However, in response to high market demand, there is now a dizzying array of big data products and services. The huge number of choices not only makes it hard for organizations to find the right tools for their needs, but can also lead to inflated costs and suboptimal performance outcomes.

The problem is compounded by the fact that transparent industry standards and benchmarks for big data products have only recently entered the picture. Solution providers are often forced to run ad-hoc benchmarks, yet these don’t necessarily reflect what the eventual workload will look like.

However, a growing number of independent benchmark organizations are working to change this, providing frameworks by which companies can prove the value of their solutions and organizations can assess and objectively compare big data systems.

SQream, a data acceleration platform, recently participated in the TPC Express Big Bench (TPCx-BB) tests from the nonprofit Transaction Processing Performance Council (TPC) to test the performance of its data lakehouse solution, SQream Blue.

The results of the test smashed existing benchmarks on several fronts, signaling a new era of high-performance big data tools with the power to help organizations unlock more from their big data projects.

Tackling unstructured data at lightning speed

The TPCx-BB testing system provides clarity on the performance capabilities of big data solution providers and their offerings.

Mock scenarios with set parameters provide a benchmark to test against that helps organizations make more informed decisions and understand which big data tools are likely to perform the most effectively for their challenges or data goals.

In recent years, many enterprises have reported that the costs associated with running their big data projects are becoming unsustainable. In June, SQream surveyed hundreds of enterprises for its State of Big Data Analytics Report and found that 92% of companies surveyed are actively aiming to reduce cloud spend on analytics. Meanwhile, 71% regularly experience ‘bill shock’ and 41% list high costs as their primary big data challenge.

However, SQream Blue, the company’s data lakehouse solution, came in well below the comparison costs during the Big Bench testing.

While some solutions offer incremental improvements, SQream Blue’s performance on data processing tasks is leaps and bounds ahead. The tool was able to process 30TB of data more than three times faster than Databricks’ Spark-based Photon SQL engine. The shorter processing time also translated into substantial cost savings, with the run costing roughly a third of the price.

Matan Libis, VP Product at SQream, expanded on this further, adding: “In cloud analytics, cost performance is the only factor that matters. SQream Blue’s proprietary complex engineering algorithms offer unparalleled capabilities, making it the top choice for heavy workloads when analyzing structured data.”

SQream Blue’s total runtime was 2462.6 seconds, with the total cost for processing the data end-to-end being $26.94. Databricks’ total runtime was 8332.4 seconds, at a cost of $76.94.
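The "three times faster" and "a third of the price" claims can be checked directly from these figures. The short Python sketch below does the arithmetic; the constant and function names are illustrative only and are not part of any SQream or TPC tooling.

```python
# Figures as reported in the article for the 30TB TPCx-BB run.
# All names below are hypothetical, chosen for illustration.
SQREAM_RUNTIME_S = 2462.6
SQREAM_COST_USD = 26.94
DATABRICKS_RUNTIME_S = 8332.4
DATABRICKS_COST_USD = 76.94


def speedup(baseline_s: float, candidate_s: float) -> float:
    """How many times faster the candidate finished than the baseline."""
    return baseline_s / candidate_s


def cost_ratio(candidate_usd: float, baseline_usd: float) -> float:
    """Candidate cost expressed as a fraction of the baseline cost."""
    return candidate_usd / baseline_usd


if __name__ == "__main__":
    s = speedup(DATABRICKS_RUNTIME_S, SQREAM_RUNTIME_S)
    c = cost_ratio(SQREAM_COST_USD, DATABRICKS_COST_USD)
    saved = DATABRICKS_COST_USD - SQREAM_COST_USD
    print(f"Speedup: {s:.2f}x")            # about 3.38x
    print(f"Cost ratio: {c:.2f}")          # about 0.35, i.e. roughly a third
    print(f"Absolute saving: ${saved:.2f}")
```

On these numbers the speedup works out to roughly 3.4x and the cost to about 35% of the Databricks figure, which is consistent with the rounded claims in the text.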

To put this into perspective, the performance of SQream Blue is equivalent to reading every cataloged book in the US Library of Congress in under an hour – and then buying them all for less than $27.

Given the enterprises surveyed for SQream’s report typically handle over 1PB of data, such sizeable efficiency gains are set to translate into a huge reduction in costs.

Aiding intensive AI data preparation tasks

In order to reach these results, SQream Blue was used to tackle several data processing tasks typically encountered in real-world scenarios, such as building a model to predict the purchase decisions of online shoppers based on available historical data.

To test the relative capabilities of SQream at scale, the benchmark analysis was run on Amazon Web Services (AWS) with a dataset of 30 TB. Generated data was stored as Apache Parquet files on Amazon Simple Storage Service (Amazon S3), and the queries were processed without pre-loading into a database.

Even so, the tool was able to perform at breakneck speeds by dividing data processing between GPUs and CPUs to avoid unnecessary overhead, ensuring unprecedentedly rapid results and optimal performance.

“Databricks users and analytics vendors can easily add SQream to their existing data stack, offload costly intensive data and AI preparation workloads to SQream, and reduce cost while improving time to insights,” added Libis.

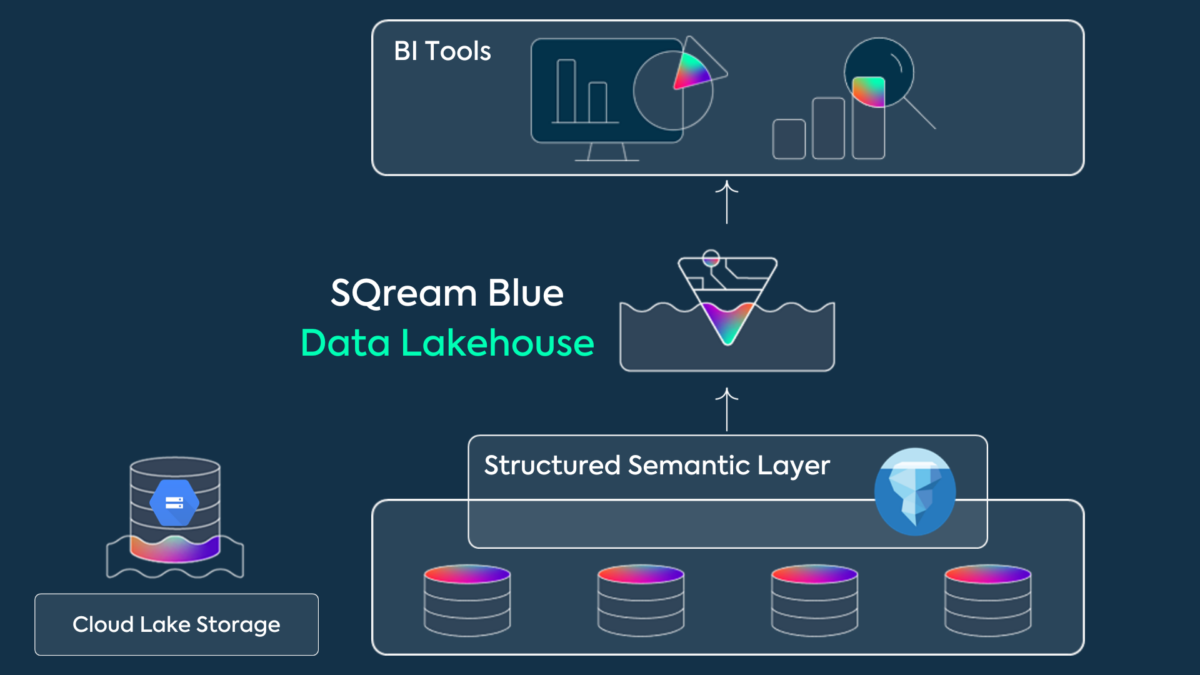

The solution’s architecture contributes to its streamlined efficiency, as data does not need to be moved at any point during the preparation cycle.

Rather, SQream Blue directly accesses data in open-standard formats at the customer’s low-cost cloud storage to maintain privacy and ownership, preserving a single source and eliminating the need for data duplication.

Innovating big data service delivery

Big data projects with out-of-control costs or lengthy delays are a major headache for enterprise organizations. More widely, if left unchecked, these common challenges could see big data projects fall out of favor.

SQream is responding to the widespread need for better data processing capabilities that complement other big data solutions in the enterprise tool kit. Available on AWS and Google Cloud marketplaces, SQream Blue is ready to bring these new service standards to big data projects worldwide.