The widespread adoption of deep learning models has launched artificial intelligence to earworm status. Everywhere you turn, there’s yet another newsworthy event with AI at its center – and understandably so.

Over the past few months, the evolving capabilities of ChatGPT (and its growing competition) have seduced the entire tech ecosystem, stealing some companies away from Metaverse-shaped lovers, and making some others obscene amounts of money.

The rise of AI-powered tools has brought with it a complex wave of excitement, apprehension, and even fear – as we watch the collective future of work, creativity, and productivity change forever. And according to OpenAI CEO Sam Altman, we’re just scratching the surface.

In an interview with Connie Loizos for StrictlyVC earlier this year, Sam shared that the OpenAI team is fully aware of the potential economic impacts that its rockstar brainchild (ChatGPT) can, and probably will, have. As such, they’re committed to throttling the releases of better and smarter GPT models year-on-year to allow society to ease into a progressively new normal and hopefully iterate on adequate guardrails.

This means that the version of ChatGPT that’s causing such a ruckus – open letters to slow down its development, bans in Europe, doomsday predictions, and so forth – isn’t anywhere near its final form.

“We’re very much here to build an AGI,” Sam went on to say when asked about more Microsoft-esque partnerships moving forward. “…products and partnerships are tactics in service of that goal.”

All of this indicates that we’re galloping toward a future where Artificial General Intelligence (AGI) is a reality and not just a cool sci-fi trope. A future that, as Sam Altman puts it, could see an increase in abundance, a turbocharged economy, and new scientific discoveries that would change the limits of possibility.

However, it’s a future that also comes with a potent risk of misuse, drastic accidents, and societal disruptions.

A blessing and a curse, if you will.

Regardless of which way the pendulum swings, we can safely predict that people are going to be living, and working, very differently.

As AI reshapes the workforce, questions about compensation or a “plan for society” have come to the fore. After all, it is our collective data that is fed to these models, even though it becomes something unrecognizable after being ingested.

Should We Get Paid for Our Contributions to AI Data?

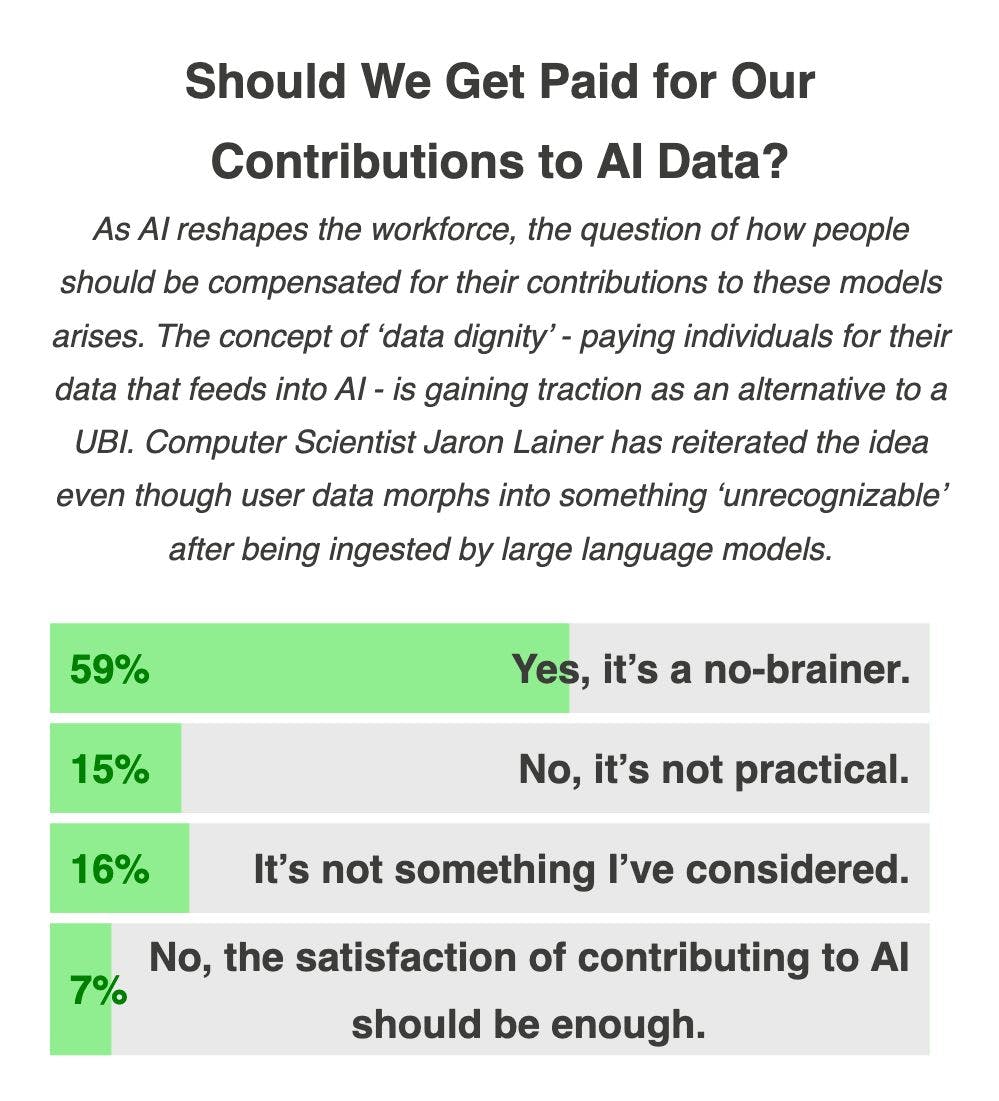

In a recent HackerNoon poll, we asked our community of 4M+ monthly readers if they thought we should be getting compensated for our data contributions to AI models.

Unsurprisingly, a 59% majority voted yes.

Another 22% had a different take: 15% of respondents expressed some reservations about the practicality of compensation at scale, while the remaining 7% were absolutely thrilled about the idea of contributing to AI, believing it’s something we should all be ecstatic about because it contributes to the advancement of humanity.

The full poll result and comment section can be found here.

What would this payment look like?

This is where things get a little tricky.

It’s been widely speculated that implementing a universal basic income (UBI), which ensures a reliable and consistent lifeline for individuals, is the ideal solution to support people as they navigate shifting career paths in this AI-driven era. However, Sam Altman thinks a UBI is only a little part of the solution.

“I think it is a little part of the solution. I think it’s great… But I don’t think that’s going to solve the problem. I don’t think that’s going to give people meaning, I don’t think it means people are going to entirely stop trying to create and do new things and whatever else. So I would consider it an enabling technology, but not a plan for society.”

Computer scientist Jaron Lanier sees a concept called “Data Dignity” as a potential road to equitable compensation at scale. According to Lanier, a data-dignity approach would identify the most unique and influential sources of inspiration when a big data model produces valuable output.

For instance, if you ask a model for “an animated movie of my kids in an oil-painting world of talking cats on an adventure,” then certain key oil painters, cat portraitists, voice actors, and writers—or their estates—might be calculated to have been uniquely essential to the creation of the new masterpiece. They would be acknowledged and motivated.

For this to work, Lanier believes that the “black-box” nature of our current AI tools must end. We need to know what’s under the hood and why. He also points out that a UBI is in some ways a scary idea.

“U.B.I. amounts to putting everyone on the dole in order to preserve the idea of black-box artificial intelligence. This is a scary idea, I think, in part because bad actors will want to seize the centers of power in a universal welfare system, as in every communist experiment.”

Final Thoughts

As we figure out a “plan for society” and settle into the “Age of AI”, one thing is clear – there’s intrinsic value in offering people some ownership over their work, even in cases where the output is transformed by the extensive processing of a large language model. The debate around compensation underscores the need to assess the future of work and address the ethical and economic implications of collective data utilization.

This article was originally published by Asher on HackerNoon.