Imagine a company interviewing a candidate for a senior IT role. The résumé checks out, the background looks solid, and the person even passes multiple video interviews. Suspicion arises only when the Mac issued to the new employee immediately starts being loaded with malware.

After a thorough investigation, the shocking truth comes out: the “engineer” was a deepfake, part of a sophisticated operation using AI to infiltrate a hiring process.

“It’s no longer just about breaking into systems; attackers are targeting humans, and AI makes it easier than ever,” says Sanny Liao, co-founder and chief product officer at Fable Security. With AI-powered attacks on the rise, small and mid-sized businesses are facing threats that can mimic employees, clone voices, and exploit rapidly adopted AI tools.

The numbers tell the story. In 2024, 90% of U.S. companies reported being targeted by cyber fraud, and generative AI tactics such as deepfakes and deepfake audio surged 118% year over year. While businesses are still learning how to leverage AI, attackers are even quicker to weaponize it.

Cybersecurity experts keep finding new ways AI helps attackers make their schemes more sophisticated and creative. On the latest episode of Brains Byte Back, Liao walks host Erick Espinosa through the top three AI-driven scams business leaders need to watch in 2026 and shares practical steps to stay ahead.

1. Synthetic Identities and Fake Employees

Fake résumés and forged references have long been a concern, but AI has taken the threat to a new level. Entire fake employees can now be created with convincing IDs, references, and even live video interviews that don’t glitch.

“The AI technology has gotten so good that it’s almost impossible to tell who’s fake over Zoom or a phone call,” Liao explains.

Remote-first companies and those hiring internationally are especially vulnerable. Once inside, a fake employee is treated like any other team member — gaining access to sensitive systems and critical data. Liao recommends a simple but powerful measure: in-person verification.

“Anyone doing remote hiring needs in-person identity verification. That’s the only reliable checkpoint left,” she says.

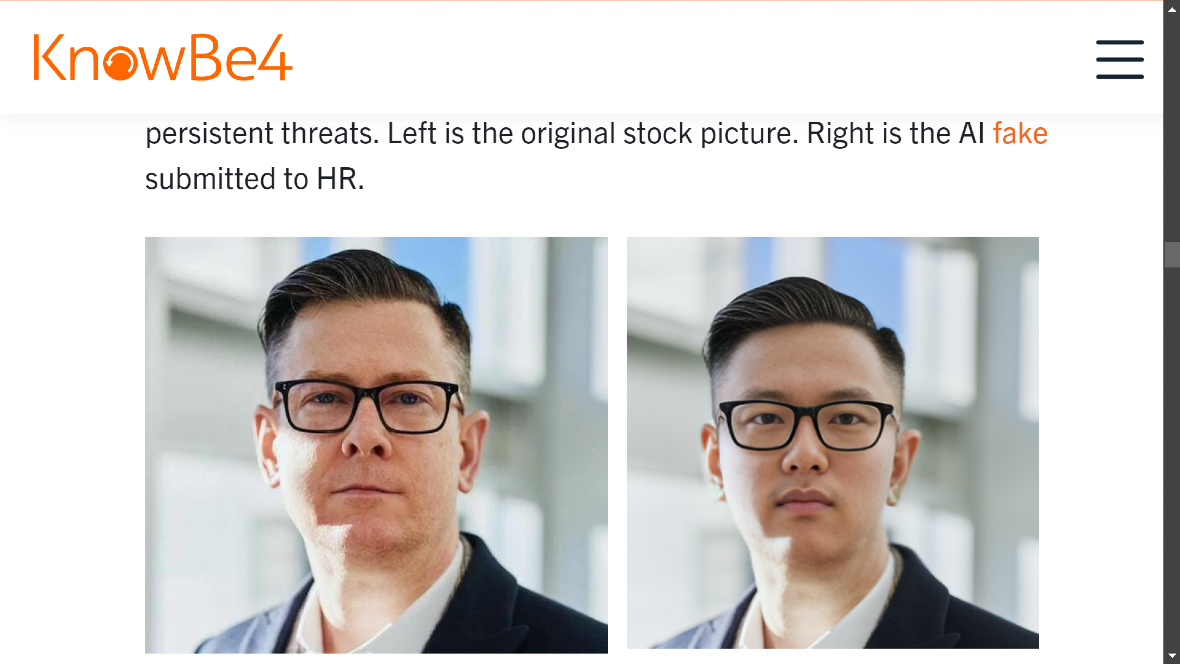

Liao pointed to the 2024 KnowBe4 case, in which the security awareness company unknowingly hired a North Korean operative.

“This is a well-organized, state-sponsored, large criminal ring with extensive resources,” KnowBe4 wrote in its post detailing what unfolded.

“The case highlights the critical need for more robust vetting processes, continuous security monitoring, and improved coordination between HR, IT, and security teams in protecting against advanced persistent threats.”

While this story made headlines, Liao points out that fake employees seeking remote work have become a common occurrence.

“We actually talked to a bunch of security leaders that we knew, and what shocked me was that about half of the security leaders I talked to told me that this is not new. We are already being targeted by this,” added Liao.

The stakes go beyond payroll fraud. Attackers can manipulate internal systems or exfiltrate proprietary information, turning a single fake hire into a company-wide breach.

2. AI-Generated Social Engineering

Phishing used to mean clunky emails riddled with typos. Now, AI has made social engineering smarter, faster, and more personalized. Attackers can research a company’s tech stack, identify high-value employees, and even clone voices to impersonate trusted colleagues.

A striking example is the ShinyHunters campaign against companies including Google, Adidas, and Workday. Using AI-assisted voice phishing, attackers convinced employees to install malicious third-party apps connected to Salesforce, exposing client data and other sensitive information.

“Attackers can now figure out which companies use which tools almost instantly. It’s knowledge on tap,” Liao says.

Her advice is straightforward: if an unexpected call comes from someone claiming to be IT or HR, hang up and call back using a number you already know belongs to your company. These attacks aren’t confined to phones, either; Slack, WhatsApp, and other communication platforms are also being targeted.

“Attackers follow the platforms you use. If you’re on Slack, they’ll try Slack. If it’s Zoom or WhatsApp, they’ll shift there,” she notes.

3. Insecure AI Adoption

The rush to adopt AI tools for automation, coding, and productivity is creating new vulnerabilities. Startups and enterprise teams alike are exposing themselves to risks that never existed before, from malware hidden in open-source packages to accidental data leaks into public AI models.

“AI is new, and everyone wants to move quickly. But the pace of adoption is amplifying vulnerabilities by design,” Liao warns.

A high-profile case in 2023 involved a Samsung developer who uploaded the company’s codebase into a personal ChatGPT account, where inputs could be used to train the public model, effectively exposing proprietary information.

Even enterprise AI products can introduce gaps if used without proper oversight.

Liao calls the Samsung incident a cautionary example of the unintentional errors that can happen when people use these AI systems. The challenge is not just malicious actors; human error in a fast-moving AI landscape creates blind spots that attackers can exploit.

Companies need AI to stay competitive, but they must do so deliberately, with security and governance prioritized throughout the process.
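For engineering teams, one concrete form of that deliberate oversight is checking which packages an AI coding assistant (or anyone else) has pulled into a project before shipping, since the Shai-Hulud attack Liao describes in the interview spread through compromised npm packages. The Python sketch below is illustrative only: it assumes an npm project whose package-lock.json uses lockfileVersion 2 or 3, where installed packages are listed under the top-level "packages" key, and the function names are hypothetical. It compares two lockfiles and reports what was added, removed, or changed.

```python
import json
from pathlib import Path


def locked_packages(lockfile: Path) -> dict[str, str]:
    """Map each installed package path to its resolved version.

    Assumes npm lockfileVersion 2 or 3, where dependencies live under the
    top-level "packages" key and the root project is the "" entry.
    """
    data = json.loads(lockfile.read_text())
    return {
        path: meta.get("version", "?")  # linked packages may lack a version
        for path, meta in data.get("packages", {}).items()
        if path  # skip the root project entry
    }


def diff_lockfiles(before: Path, after: Path) -> dict[str, list[str]]:
    """Report packages added, removed, or re-versioned between two lockfiles."""
    old, new = locked_packages(before), locked_packages(after)
    return {
        "added": sorted(f"{p}@{new[p]}" for p in new.keys() - old.keys()),
        "removed": sorted(f"{p}@{old[p]}" for p in old.keys() - new.keys()),
        "changed": sorted(
            f"{p}: {old[p]} -> {new[p]}"
            for p in new.keys() & old.keys()
            if old[p] != new[p]
        ),
    }
```

For example, after saving the previous lockfile with `git show HEAD~1:package-lock.json > old-lock.json`, calling `diff_lockfiles(Path("old-lock.json"), Path("package-lock.json"))` lists every new or changed dependency for a human to review. A clean-looking diff does not prove a package is safe; it only makes unexpected additions visible.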

Protecting the Human Factor

Attackers aren’t just hacking tools anymore; they’re targeting people. “At the end of the day, if they can get around your tools and get to your people, they win,” Liao emphasizes.

For business leaders, the takeaway is clear: verify identities in hiring, train employees to recognize AI-powered social engineering, and adopt AI tools cautiously with security oversight in place. Staying ahead isn’t about avoiding AI; it’s about understanding how it can be weaponized and preparing your team for the “what ifs.”

Companies will continue to leverage the technology to grow, and attackers will continue to harness it to exploit weaknesses in ways that are faster, smarter, and more convincing than ever.

You can listen to the full episode on Spotify, Anchor, Apple Podcasts, Breaker, Google Podcasts, Stitcher, Overcast, Listen Notes, PodBean, and Radio Public.

Find out more about Sanny Liao here.

Learn more about Fable Security here.

Reach out to today’s host, Erick Espinosa – [email protected]

Get the latest on tech news – https://sociable.co/

Leave an iTunes review – https://rb.gy/ampk26

Follow us on your favourite podcast platform – https://link.chtbl.com/rN3x4ecY

TRANSCRIPT:

Sanny Liao: Hi, everyone. My name is Sanny Liao. I am the co-founder and chief product officer at Fable Security. We are a human risk management solution that works primarily with enterprises to help prepare their employees for the latest threats facing them.

Erick Espinosa: Sanny, thank you so much for joining me on this episode of Brains Byte Back. Sanny, and those of you listening, on Brains Byte Back we love to talk about how companies are using AI to automate work and make things a lot easier. But I feel like what we don’t talk about enough is how attackers are using those same tools. And this conversation matters in today’s world, where AI is influencing not just our work but also, I guess, our sense of security.

We’ve seen these scams hit the news, and I mentioned this to you, Sanny: the most typical ones are personal scams, like the grandparent scam, where people use AI voices to mimic grandchildren. That’s happening on the personal side of things. But at the same time, in the enterprise and startup world, attackers now have the ability to gather information, mimic trusted people’s voices, and probe for weak spots.

And this is happening really, really fast. Some teams still think that AI scams only hit big enterprises, but we’re discovering that small and mid-sized companies are actually easier for attackers to study, impersonate, and manipulate.

So today we’re going to focus on the three threats that you see as the fastest growing, Sanny, some of which have made global headlines. We’re also going to talk about the common things startups should be focusing on and the signals founders should pay attention to when it comes to these types of scams.

But before we dive into that, Sanny, I’d love to learn a little bit more about your background. Can you give us some insight into what led you into fraud prevention and identity risk?

Sanny Liao: Yeah, absolutely. So I actually started my career as a PhD researcher in economics. After I got my PhD, I got to the point where I really wanted to make things that people can feel, things that have real-world impact.

At the time, I was going to school at Berkeley, and I ended up working with a small ad tech startup out of San Francisco called TellApart. That’s where, for the first time, I realized the power of technology and how it can actually change our lives.

I remember thinking at the time that this ad tech was more powerful than any of us realized, but it was a pity it was being used to get people to click on ads.

A couple of years after that, a few of my friends went off to start a cybersecurity startup called Abnormal Security. I knew nothing about email security. It was just a group of people I really, really enjoyed working with. And I thought, this sounds good, right? We can figure out email. How hard could it be? It ended up being one of the best decisions I’ve made in my life.

So I ended up joining that team, and we built Abnormal Security from the ground up.

We started with literally just 10 of us in the back of our VC’s office trying to figure out what BEC meant. BEC stands for business email compromise.

Now Abnormal is, obviously, a very successful cybersecurity company, I think one of the fastest-growing cybersecurity startups in the world right now. So that was my introduction to cybersecurity.

The moment I knew I wanted to stay in cyber forever came when I had a four-year-old child.

He asked me, Mom, what do you do in your job? And I’m there thinking, how do I explain email security to a four-year-old?

So I said something along the lines of, there are bad guys out there who are trying to steal people’s money and stuff, and Mom stops the bad guys on the internet. He paused for a second and said, like Batman?

That was the point. I’m like, okay, if my child thinks that I’m Batman when I go to work, I think I’m doing something right in life. But that was my start in cyber.

Erick Espinosa: But you’re helping a lot of businesses in a way. It’s kind of like, I don’t want to say good versus evil, but obviously you’re stepping up the game of a lot of these companies in terms of trying to protect them. And right now, I mean, you got into it when tech was a thing, but now tech is a necessity, and especially when it comes to AI.

But when did you first notice that AI was changing how attackers behave?

Sanny Liao: Yeah, this actually started toward the end of my time at Abnormal. Abnormal Security stops sophisticated email-based threats, and around 2022 we started seeing those threats evolve very quickly. We used to see a lot of bad grammar and bad formatting in these emails, so it was very easy to spot the tells of an email-based attack. Then, all of a sudden, over the course of a year, all the grammar mistakes and spelling errors just disappeared.

And there was a huge increase in both the scale and the sophistication of attacks, as well as in their ability to bypass a lot of the detection tools.

So already at that point, we started seeing that attackers were in fact adopting AI. They’re among the first people to adopt AI, and they’re going to evolve just as fast as the AI technology evolves. That was the beginning of it.

And the reason we left Abnormal to start Fable Security was, if you remember, the MGM Resorts attack in 2023, which actually happened over voice phishing, right?

Someone called MGM Resorts’ IT help desk, bypassed their MFA, got into their systems, and deployed ransomware. That was a case where we realized that attackers are now able to target precisely, right? They knew exactly who to target: the person who had super admin privileges.

They were able to get in, target him, and execute a very successful voice phishing attack. It’s not something you could have stopped with any of the tools most companies had. And that was the point where we realized, hey, I think there’s something here, right? Attackers are, at the end of the day, focused on the human.

How can they get around all the technical tools a company has and get to the human at the end? And that is happening across more than one surface area. It’s not just email anymore; attackers are finding other ways to reach people. And AI has made it tremendously easy and powerful for attackers to do that.

Erick Espinosa: That sets the stage, because a lot of people think AI started becoming a thing during the pandemic, which is also when people started working from home.

So there’s a relationship between when the technology started thriving and growing really fast and when people were doing their work at home, and that combination opened doors for attackers to access companies in other ways.

And I found this quote here that’s kind of interesting. It speaks to last year: in 2024, 90% of US companies reported that they had been targeted by cyber fraud, and generative AI tactics, such as deepfakes or deepfake audio, rose 118% year over year. That number is mind-boggling.

And even just this year, when you look at deepfakes, I think Nano Banana was the one I saw most recently. And I just started playing around with Sora, and it looks so real that it’s really hard for people to differentiate what’s real and what’s fake.

But according to that same study, despite the growth and success of cyber fraud methods, most executives report high confidence: 90% confidence in themselves and 89% confidence in the ability of their employees to spot deepfakes, business email compromise like you mentioned, scams, and other advanced fraud attacks.

I feel like that’s a very high level of confidence. Does that number reflect the companies you’ve connected with, or are you finding that they’re starting to realize, no, actually there’s a problem here?

Sanny Liao: Yeah, I think sometimes people don’t know what they don’t know, right? You can only speak to the attacks you’ve detected, not necessarily the ones that got past you. It’s interesting that you brought up deepfakes as something a lot of companies deal with.

If you remember, last year KnowBe4 talked about how they accidentally hired a North Korean fake IT worker. Of course it made headlines; feel free to look it up. It’s actually quite crazy that a North Korean fake IT worker pretended to be a software engineer and got hired by a security company.

So when that happened, we actually talked to a bunch of security leaders that we knew, and what shocked me was that about half of the security leaders I talked to told me that, oh, this is not new. We are already being targeted by this.

There was one company with a very strong brand that’s out there in the news quite a bit. They said they’re hit by this type of attack almost every single month. And what we saw was that the more remote workers a company has, which is something that has changed a lot in the last couple of years, the more vulnerable it is to these types of fake identity and fake employee attacks.

Erick Espinosa: So that actually leads to the first threat, which was synthetic identities and fake employees. And like you mentioned, apparently it’s been around for years. But what is really changing now?

I imagine most of the companies targeted by this would be IT companies, because a lot of them work with employees across different countries, right? Or in different regions. What do you think is changing right now that’s making it even more of an issue?

Sanny Liao: Yeah, attackers are creatures of opportunity, right? What AI has changed is that it made it very, very easy for attackers to execute a fake employee attack. When a lot of companies hire, they rely on a couple of verifications to figure out who these people are, right?

They’re looking at résumés. Maybe they’re doing a background check. But all those things are now very easy to deepfake with the assistance of AI. KnowBe4, for example, was a good one, where they actually went through all the vetting, right?

They even looked at this guy’s driver’s license, but all of that was deepfaked. And that, of course, led to actually a huge incident because once the attackers are in, they now are treated as a normal employee.

They have access to your data, to your systems. In the least bad case, they’re doing it to make money by pretending to be someone else to get a job. But in the more egregious cases, they’re getting in because they want access to your systems.

They want access to your data. So basically, AI made it a lot easier for attackers to successfully execute this type of attack.

Erick Espinosa: And with that information, I guess they could demand ransoms, obviously for large amounts of money. For a smaller business, what checkpoints do you think they need to focus on to catch cases like this, where somebody is posing as someone they’re not to get a job?

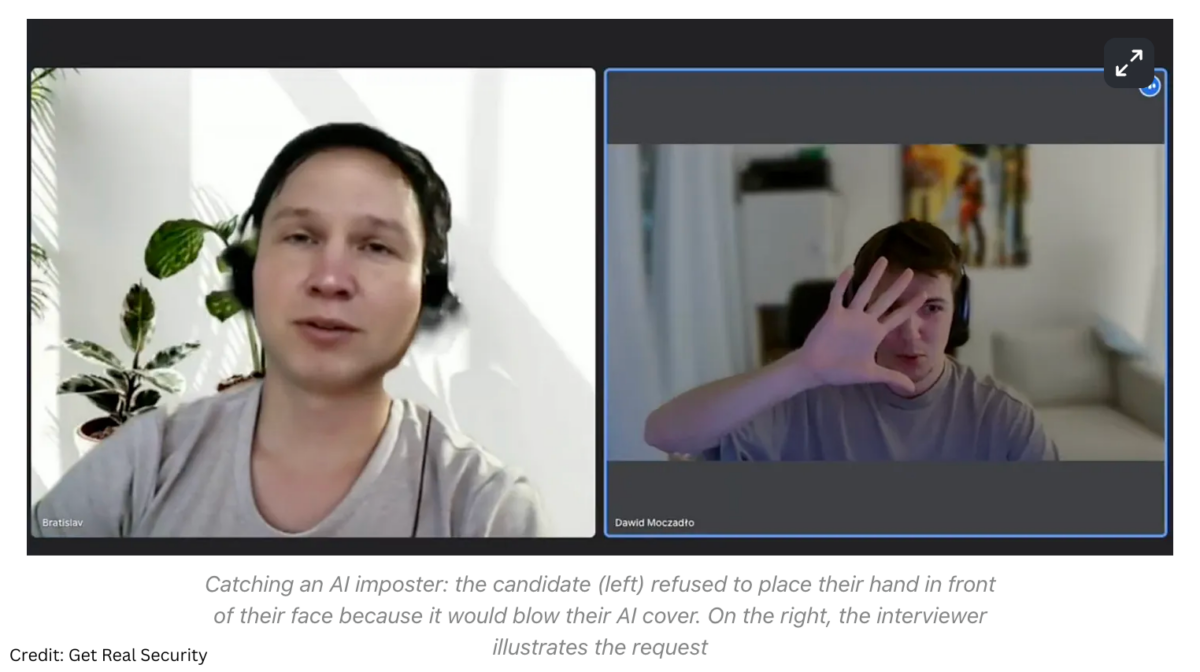

Sanny Liao: Yeah, yeah. For these cases, the AI technology has gotten so good that it’s almost impossible to tell who’s a fake person versus a real person over Zoom or a phone call, right?

If you remember, even a year ago, if you had someone move their face or put a hand over their face, a lot of the AI technology would start glitching out. That’s no longer the case.

The AI technology has gotten so good that that doesn’t really cause an issue anymore. So I would actually recommend that anyone who’s doing remote hiring, you need to actually have a process where you’re doing in-person verification of identity.

Erick Espinosa: Okay. So like physically have somebody, I guess maybe hire somebody in, I don’t know, like a trusted third party maybe to go and meet the person, collect documents, things like that?

Sanny Liao: Exactly.

Erick Espinosa: Okay. That’s smart. And the other one you mentioned here is AI-generated social engineering. Can you explain what that is exactly and what it looks like in practice?

Sanny Liao: Yeah, absolutely. When most people think about social engineering, they’re thinking about an email they receive, right? Or a WhatsApp message where they’re being tricked into clicking a link, sending a gift card somewhere, etc.

But attackers have figured out how to use AI in very creative ways to make social engineering attacks a lot more powerful and a lot more scalable.

There’s the obvious one, which is that attackers are using AI to deepfake voices. For example, in the latest ShinyHunters attack, researchers found that attackers were using AI to impersonate the help desk when calling employees to get them to install a Salesforce app and whatnot.

That’s the obvious one, right? AI is really great at deepfaking stuff. The less obvious one, which is impacting everybody, is that attackers are also using AI to do their research a lot better.

I’ll give you an example. In the ShinyHunters attack, the way attackers found their targets was by looking for companies that use Salesforce and for people who have Salesforce access. Now, if I were doing this one by one before AI, it would take a non-trivial amount of work to figure out who’s using Salesforce, right? There are a lot of people who use Salesforce.

But pinpointing exactly which companies use Salesforce and which individuals have that access takes a bit of work. Now, with AI, that type of research is almost instant. It’s knowledge on tap, right?

So that’s an example where you can assume attackers are using AI to do mass reconnaissance so they can figure out the most targeted attack they can execute that’s going to give them the biggest return.

And the unfortunate part is that you can’t stop attackers from using AI, right? They’re extremely motivated to do so. It just means that the attacks we receive are going to be a lot more personalized and targeted, and a lot more convincing for the recipients as well.

Erick Espinosa: I connect that to how companies now personalize information to target customers, you know what I mean?

A lot of the information is online and easy to access, and AI can collect that information and tell you a story about a person automatically. Going off of that, is there anything online that you would recommend companies not share?

Because how would attackers find out that these companies specifically use Salesforce?

I imagine that information is readily available online, no?

Sanny Liao: It’s quite easily available. If I were an attacker, it’s pretty easy to look up whether a company is hiring, for example, Salesforce developers. That’s a telltale sign that they’re using Salesforce, and every company has to recruit.

So these jobs are publicly available.

So I think there’s some amount of risk that we can mitigate…

Erick Espinosa: There’s always going to be some activity we can’t fully stop. Attackers will get access to certain information. One example you mentioned is the ShinyHunters vishing attack. Am I saying that right?

Sanny Liao: Yes. The attack is still unfolding. It first came out in the news when Google, Adidas, Workday and a few others reported that attackers targeted sales teams with access to Salesforce. They used two tactics.

They called employees while pretending to be IT support and convinced them to install a malicious third-party app. That gave the attackers access to Salesforce, which exposed client emails, contact information and other data. The biggest impact was the loss of trust between companies and their clients.

The group also pretended to be HR and used that story to get employees to reveal personal information. That let the attackers bypass MFA and get deeper into Salesforce.

More recently, they compromised accounts belonging to Gainsight employees and inserted malware into a Gainsight Salesforce extension. Gainsight is widely used, so that created another wave of data loss.

Erick Espinosa: I imagine the employees felt violated. When you meet with companies, what should founders or employees watch for when they get calls or emails that feel off?

Sanny Liao: If someone calls claiming to be IT support or HR, don’t trust it. Almost no legitimate IT or HR team will call you out of the blue. If you’re unsure, hang up and call back using the number you already know belongs to your company. Treat every unexpected call with suspicion.

Erick Espinosa: What about Slack? Could attackers get in there by pretending to be someone from a vendor and message employees?

Sanny Liao: Yes. We’ve seen attacks happen over Slack, WhatsApp and other channels. Many international companies use WhatsApp heavily. Attackers follow whatever platform you use. If you’re on Slack, they’ll look for you there. If it’s Zoom or WhatsApp, they’ll try those too. Once companies get better at handling phone-based phishing, attackers shift to the next channel.

Erick Espinosa: The third threat you mentioned is vulnerabilities created by insecure AI usage. This one is common. Can you walk through the Shai-Hulud example and what insecure AI usage looks like?

Sanny Liao: Insecure AI usage is a major concern. Every company is adopting AI to stay competitive. The ones who adopt it quickly and correctly will have an edge. The problem is that rapid adoption creates new vulnerabilities that companies never had to deal with before.

The recent Shai-Hulud attack targeted npm packages. Attackers compromised GitHub accounts of open source contributors and slipped malware into widely used packages. Most startups rely heavily on open source, so when they pulled the latest versions, they unknowingly pulled in malware.

This connects to AI because tools like Cursor and Claude Code make coding faster and more automated. If you rely heavily on AI to write or review code, you may not notice what packages are being added or how they behave. Without deliberate oversight, it’s easy to miss these risks.

Asana also reported a flaw in their MCP server. Tenants could accidentally access each other’s data. There’s no established playbook for building these AI-driven systems, so companies are learning as they go while moving fast. That creates new vulnerabilities by design.

Erick Espinosa: It reminds me of the crypto era. People rushed in, got sold products by people trying to make quick money and ended up exposing themselves. Now you see the same with AI tools that ask for deep access to company data. Have you seen cases where companies jumped into AI too fast and realized later they made themselves vulnerable?

Sanny Liao: Yes, and there’s no simple solution. It’s not just small third-party AI tools. Even large enterprise AI products ship with new vulnerabilities. Google launched its coding tool and a vulnerability was found the next day.

AI is new and everyone wants to move quickly, so issues are going to happen. There was a well-known case in 2023 when a Samsung developer uploaded the entire company codebase into a personal ChatGPT account. The personal version uses data for public training, so the code became publicly accessible. Samsung couldn’t get it back. That wasn’t an attacker. It was an unintentional mistake during early adoption.

Both intentional attacks and unintended misuse create real risks. Companies need AI to stay competitive, but the pace of adoption is amplifying vulnerabilities.

Erick Espinosa: I think you’re also a great example of how AI is creating new jobs. While there are attackers, there are also new opportunities in this field. Security roles have always been needed, but now people see the value more clearly. For anyone interested in getting into this space, it feels like a career that isn’t going away anytime soon.

Sanny Liao: Absolutely. This is a really exciting time, especially for people who are just entering the workforce. No one has a degree in how to use the latest AI tools. It’s a blank slate. You have the chance to learn cutting-edge tools, come up with creative ways to apply them, and create value for a business. It’s a great place to be.

Erick Espinosa: I agree. Sanny, thank you for your insight and for breaking this down. You gave our listeners a clear view of how attackers are stepping up their game. For anyone who wants to learn more or follow your work, what’s the best way to find you online?

Sanny Liao: You can find our company at fablesecurity.com. I’m also on LinkedIn. Feel free to connect with me. I enjoy talking about cybersecurity and the latest AI threats. Always happy to have those conversations.

Erick Espinosa: And we loved hearing it. Thank you so much.

Sanny Liao: Thank you.