DARPA has sought to monitor, predict & modify human behavior with massive data collection & analyses for decades: perspective

DARPA is looking to predict, incentivize, and deter the future behaviors of the Pentagon’s adversaries by developing an algorithmic “theory of mind.”

“The goal of an upcoming program will be to develop an algorithmic theory of mind to model adversaries’ situational awareness and predict future behavior”

DARPA, “Theory of Mind” Special Notice, December 2024

The US Defense Advanced Research Projects Agency (DARPA) is putting together a research program called “Theory of Mind” with the goal of developing “new capabilities to enable national security decisionmakers to optimize strategies for deterring or incentivizing actions by adversaries,” according to a very brief special announcement.

DARPA says, “The program will seek to combine algorithms with human expertise to explore, in a modeling and simulation environment, potential courses of action in national security scenarios with far greater breadth and efficiency than is currently possible.

“This would provide decisionmakers with more options for incentive frameworks while preventing unwanted escalation.”

“The program will seek not only to understand an actor’s current strategy but also to find a decomposed version of the strategy into relevant basis vectors to track strategy changes under non-stationary assumptions”

DARPA, “Theory of Mind” Special Notice, December 2024

The author of the Theory of Mind special notice is Eric Davis, who joined DARPA in February 2024.

Previously, Davis was the principal scientist of artificial intelligence, machine learning, and human-machine teaming at Galois, a tech R&D company whose clients include DARPA, the US Intelligence Community, and NASA, and whose partners include the Bill and Melinda Gates Foundation.

The Theory of Mind special notice does not mention who the adversaries are, but once such an algorithm is developed, there’d be no putting this genie back in the bottle.

The 2017 edition of the “DoD Dictionary of Military and Associated Terms” defines “adversary” as “a party acknowledged as potentially hostile to a friendly party and against which the use of force may be envisaged.”

The dictionary does not define the word “party,” so under this vague definition, an adversary could be anyone perceived to be “potentially hostile” and therefore worthy of the use of force.

Going back decades, DARPA has sought to monitor, predict, and modify human behavior by collecting and analyzing as much information on people as possible.

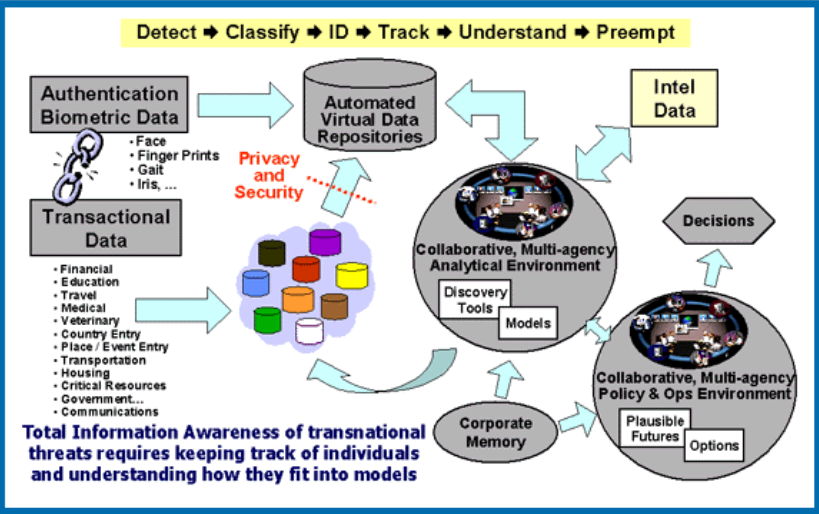

“The goal of the Total Information Awareness (TIA) program is to revolutionize the ability of the United States to detect, classify and identify foreign terrorists – and decipher their plans – and thereby enable the US to take timely action to successfully preempt and defeat terrorist acts”

DARPA, Total Information Awareness (TIA), July 2002

Following the attacks of September 11, 2001, DARPA announced its now-defunct (or potentially splintered) Total Information Awareness (TIA) program in July 2002 “to revolutionize the ability of the United States to detect, classify and identify foreign terrorists – and decipher their plans – and thereby enable the US to take timely action to successfully preempt and defeat terrorist acts.”

Whitney Webb, an investigative journalist, author, and contributing editor at Unlimited Hangout, described TIA as a “precrime approach to combatting terrorism” with the purpose of developing “an ‘all-seeing’ military-surveillance apparatus.”

In 2003, the American Civil Liberties Union (ACLU) described TIA as possibly being “the closest thing to a true ‘Big Brother’ program that has ever been seriously contemplated in the United States.”

According to the Electronic Privacy Information Center (EPIC), “A key component of the TIA project was to develop data-mining or knowledge discovery tools that would sort through the massive amounts of information to find patterns and associations.”

DARPA’s latest Theory of Mind program parallels aspects of Total Information Awareness; however, very little public information about Theory of Mind is currently available.

Another DARPA project with a similar goal of creating a massive database of human behavior was the LifeLog program, which was announced on August 4, 2003, and was reportedly scrapped on February 4, 2004, the very same day that TheFacebook was launched.

“To build a cognitive computing system, a user must store, retrieve, and understand data about his or her past experiences. This entails collecting diverse data, understanding how to describe the data, learning which data and what relationships among them are important, and extracting useful information”

DARPA, LifeLog, August 2003

An archived version of DARPA’s LifeLog program description points to parallels between LifeLog, TIA, and Facebook, especially as they relate to individuals capturing and analyzing their own experiences:

“LifeLog is one part of DARPA’s research in cognitive computing […] This new generation of cognitive computers will understand their users and help them manage their affairs more effectively.

“The research is designed to extend the model of a personal digital assistant (PDA) to one that might eventually become a personal digital partner.

“LifeLog is a program that steps towards that goal. The LifeLog Program addresses a targeted and very difficult problem: how individuals might capture and analyze their own experiences, preferences and goals.

“The LifeLog capability would provide an electronic diary to help the individual more accurately recall and use his or her past experiences to be more effective in current or future tasks.”

When Wired reported that the Pentagon killed the program in February 2004, the authors wrote that “LifeLog aimed to gather in a single place just about everything an individual says, sees or does: the phone calls made, the TV shows watched, the magazines read, the plane tickets bought, the e-mail sent and received.”

Does this not sound like what Facebook and so many other social media companies would later accomplish commercially?

“DARPA is interested in developing new capabilities to enable national security decisionmakers to optimize strategies for deterring or incentivizing actions by adversaries”

DARPA, “Theory of Mind” Special Notice, December 2024

For good or ill, DARPA’s Theory of Mind program appears to be the latest incarnation of a decades-long pursuit to know everything about an individual or group in order to predict and modify human behavior.

LifeLog was billed as a personal assistant tool to help warfighters keep a record of all their activities for use in their own personal development, while Total Information Awareness was said to be aimed at preempting terrorist activities.

Both programs required massive data collection. Both sought to change behaviors for different future outcomes.

Now, Theory of Mind is geared towards creating an algorithm to understand and thwart the plans of whomever DARPA considers to be “adversaries.”

If successful, how many different applications could be derived from this type of precrime technology?

Could the Theory of Mind algorithm ever be used by law enforcement? How about commercial entities for business purposes?

Of course, research programs with similar goals already exist, and comparable commercial products are plentiful.

The difference now is the greater speed and efficiency with which these tools, tactics, and technologies are developed, how they can be combined to complement one another, and the purposes for which public and private entities intend to deploy them.

Image Source: Image by freepik