As Facebook unveils its new policy on deepfakes, will the social media giant be able to handle a joke and know which videos are meant to be in jest as opposed to ones with nefarious intent?

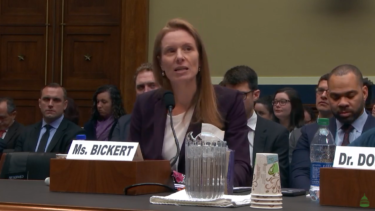

Facebook’s counterterrorism chief is appearing before Congress today to talk about digital deception, just two days after announcing the company’s new policy on deepfakes. But will Facebook be able to distinguish between parody and coordinated deception?

Today, the US House Subcommittee on Consumer Protection and Commerce is holding a hearing called “Americans at Risk: Manipulation and Deception in the Digital Age.”

A major topic at Wednesday’s hearing was how to combat the spread of deepfakes and manipulated media on the internet.

In her testimony, Facebook’s counterterrorism chief Monika Bickert explained, “Manipulated media can be made with simple technology like Photoshop, or with sophisticated tools that use artificial intelligence or ‘deep learning’ techniques to create videos that distort reality—usually called ‘deepfakes.’

“While these videos are still relatively rare on the internet, they present a significant challenge for our industry and society as their use increases, and we have been engaging broadly with internal and external stakeholders to better understand and address this issue.

“That is why we just announced a new policy that we will remove certain types of misleading manipulated media from our platform.”

Bickert herself announced Facebook’s new policy on deepfakes and manipulated media on Monday.

Facebook will remove misleading manipulated media if it meets the following criteria:

- It has been edited or synthesized – beyond adjustments for clarity or quality – in ways that aren’t apparent to an average person and would likely mislead someone into thinking that a subject of the video said words that they did not actually say.

- And it is the product of artificial intelligence or machine learning that merges, replaces or superimposes content onto a video, making it appear to be authentic.

“We will remove videos that have been edited or synthesized using artificial intelligence or deep learning techniques in ways that are not apparent to an average person and that would mislead an average person to believe that a subject of the video said words that they did not say,” Bickert testified.

At first glance, the policy looks like an attempt to stop disinformation from spreading. On a second look, though, it leaves a lot of room for interpretation, and with it, huge potential for censorship.

However, as Bickert wrote on the Facebook blog, “This policy does not extend to content that is parody or satire, or video that has been edited solely to omit or change the order of words.”

My question is: how does Facebook draw the line between parody or satire on one side and disinformation on the other?

In other words, can Facebook take a joke, or will it, like Google-owned YouTube, also remove content that “brushes up” against its policies?

How will Facebook navigate the slippery slope of deepfakes that may or may not have political motives? Remember what happened with the controversial “Drunk Pelosi” cheap fake?

Rather than removing content outright, Facebook could also shadowban it. In fact, Facebook holds a patent on shadowbanning tactics.

Witnesses at Wednesday’s hearing included:

- Monika Bickert:
  - Facebook’s head of product policy and counterterrorism
  - Aspen Cybersecurity Group member
  - Former resident legal advisor at the US Embassy in Bangkok
  - Specialist in response to child exploitation and human trafficking
- Tristan Harris:
  - Co-founder of the Center for Humane Technology
  - Former Google design ethicist
  - Called “the closest thing Silicon Valley has to a conscience” by The Atlantic
- Joan Donovan:
  - Director of the Technology and Social Change Research Project at Harvard Kennedy School’s Shorenstein Center on Media, Politics and Public Policy
  - Former research lead for Data & Society’s Media Manipulation Initiative
- Justin (Gus) Hurwitz:
  - Co-director of the Space, Cyber, and Telecom Law Program, University of Nebraska College of Law
  - Director of Law and Economics Programming at the International Center for Law & Economics
  - Worked at Los Alamos National Laboratory and interned at the Naval Research Laboratory

This article was written while the hearing was still in progress.