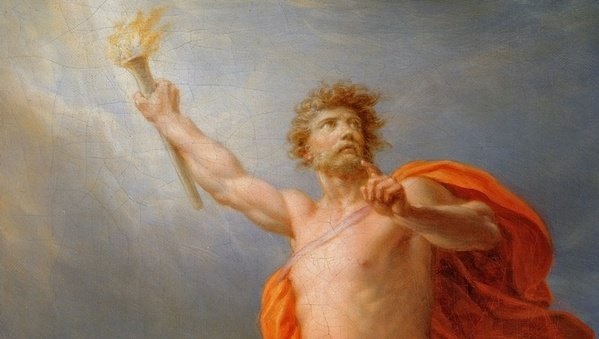

Prometheus liberated humankind by defying the gods and bringing it the flame of knowledge; DARPA wants to make sure that machine learning (ML) stays trustworthy and doesn’t free itself and spread like an uncontrollable wildfire.

“Under what conditions do you let the machine do its job? Under what conditions should you put supervision on it?” Jiangying Zhou

Teaching machines to show their work, so we know what the hell they are doing and can make them partners, is an underlying theme of the Competency-Aware Machine Learning (CAML) Program, launched on Thursday by the Defense Advanced Research Projects Agency (DARPA).

DARPA wants to make AI a collaborative partner for national defense, but at this early stage the language suggests that DARPA wants to make sure that machine learning doesn’t keep us in the dark about how it functions, why it behaves the way it does, and what it will do next.

To be clear, the basic issue that the CAML Program is trying to address is “the machines’ lack of awareness of their own competence and their inability to communicate it to their human partners,” which “reduces trust and undermines team effectiveness.”

According to DARPA, state-of-the-art machine learning systems “are unable to communicate their task strategies, the completeness of their training relative to a given task, the factors that may influence their actions, or their likelihood to succeed under specific conditions. One of the key components for building a trust relationship is the knowledge of competence (an accurate insight into a partner’s skills, experience, and reliability).”

In other words, the CAML Program will be trying to figure out what strategies ML uses, why the AI arrives at its decisions, and how to control it by making it “trustworthy.”

However, the official reason for the creation of the CAML Program is “to develop competence-based trusted machine learning systems whereby an autonomous system can self-assess its task competency and strategy, and express both in a human-understandable form, for a given task under given conditions.”

DARPA Defense Sciences Office program manager Jiangying Zhou explained in a statement, “If the machine can say, ‘I do well in these conditions, but I don’t have a lot of experience in those conditions,’ that will allow a better human-machine teaming. The partner then can make a more informed choice.”

“The man has now become like one of us, knowing good and evil. He must not be allowed to reach out his hand and take also from the tree of life and eat, and live forever.” Genesis 3:22

If machines were to have a Promethean moment where their knowledge escapes that of their creators, then they could become so advanced that humans wouldn’t be able to control them, and definitely not partner with them.

What DARPA is creating on the side is a way to establish a report card on ML and to keep tabs on its development, so it can be harnessed and not get out of control, thus making it a collaborative partner and not a Prometheus.

“This competency-aware capability is a key element towards transforming autonomous systems from tools to trusted, collaborative partners. The resulting competency-aware machine learning systems will enable machines to control their behaviors to match user expectations and to allow human operators to quickly and accurately gain insight into a system’s capability in complex, time-critical, dynamic environments, thereby improving the efficiency and effectiveness of human-machine teaming,” according to DARPA.

Zhou added, “Under what conditions do you let the machine do its job? Under what conditions should you put supervision on it? Which assets, or combination of assets, are best for your task? These are the kinds of questions CAML systems would be able to answer.”

Using a simplified example involving autonomous car technology, Zhou described how valuable CAML technology could be to a rider trying to decide which of two self-driving vehicles would be better suited for driving at night in the rain.

The first vehicle might communicate that at night in the rain it can tell whether it is seeing a person or an inanimate object with 90 percent accuracy, and that it has completed the task more than 1,000 times.

The second vehicle might communicate that it can distinguish between a person and an inanimate object at night in the rain with 99 percent accuracy, but has performed the task fewer than 100 times.

Equipped with this information, the rider could make an informed decision about which vehicle to use.
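
One way to picture how a rider might weigh accuracy against experience is to attach a rough uncertainty range to each vehicle's self-reported number. The sketch below is purely illustrative and is not how DARPA or CAML does it: the `CompetencyReport` class, the vehicle names, and the trial counts (1,200 and 80, standing in for "more than 1,000" and "fewer than 100") are all assumptions, and the Wilson score interval is simply a standard statistical tool chosen here for the example.

```python
import math
from dataclasses import dataclass
from typing import Tuple


@dataclass
class CompetencyReport:
    """Hypothetical self-report a vehicle might give for one condition."""
    name: str
    condition: str
    accuracy: float  # observed fraction of correct person/object calls
    trials: int      # number of times the task was performed (assumed value)


def wilson_interval(accuracy: float, trials: int, z: float = 1.96) -> Tuple[float, float]:
    """Wilson score interval for a proportion; z=1.96 is roughly 95% confidence."""
    if trials <= 0:
        raise ValueError("trials must be positive")
    z2 = z * z
    denom = 1 + z2 / trials
    center = (accuracy + z2 / (2 * trials)) / denom
    half = z * math.sqrt(
        accuracy * (1 - accuracy) / trials + z2 / (4 * trials ** 2)
    ) / denom
    return center - half, center + half


if __name__ == "__main__":
    # Trial counts are illustrative stand-ins, not figures from DARPA.
    reports = [
        CompetencyReport("vehicle A", "night, rain", accuracy=0.90, trials=1200),
        CompetencyReport("vehicle B", "night, rain", accuracy=0.99, trials=80),
    ]
    for r in reports:
        low, high = wilson_interval(r.accuracy, r.trials)
        print(
            f"{r.name} ({r.condition}): {r.accuracy:.0%} over {r.trials} trials, "
            f"plausible range {low:.1%} to {high:.1%}"
        )
```

Under these assumed numbers, the less-experienced vehicle gets a noticeably wider range around its reported accuracy, which is the kind of context a competency-aware system could hand to the rider alongside the headline figure.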