Big data has been the preserve of the largest tech companies, but synthetic data may be about to level the playing field.

https://www.youtube.com/watch?v=42QZggpb–M

The advent of synthetic data is upon us with implications for a variety of industries as well as applications for government intelligence agencies.

On March 12, 2019 the venture capital arm of the CIA, In-Q-Tel (IQT), formed a strategic partnership and investment with AI.Reverie, a leading provider of computer vision algorithms leveraging its proprietary synthetic data platform.

Read More: CIA-backed, NSA-approved Pokemon GO users give away all privacy rights

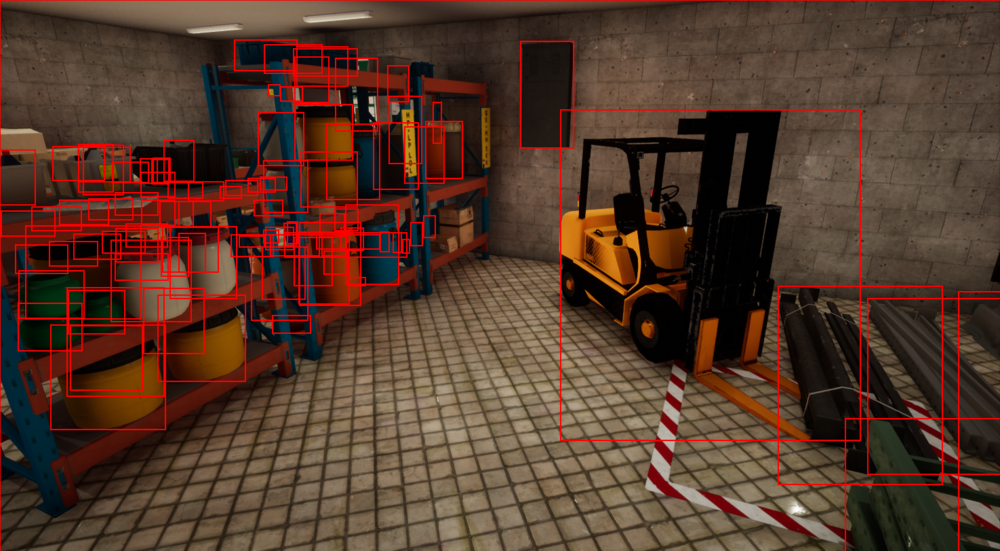

US government agencies, along with the military, are looking to AI.Reverie because the company can produce photorealistic virtual worlds that closely mimic any true location where clients’ services are used.

Why would intelligence agencies be interested in synthetic data? Let us take a look at what synthetic data is, and then we’ll explore its use cases.

What is Synthetic Data?

Synthetic data is information which is manufactured artificially rather than data generated from real world events. It is used to model and validate production, operational and mathematical datasets and in the training of machine learning models. In layman’s terms, it’s computer generated data which mimics real world data.

Read More: Nature is intelligent: Pentagon looks to insects for AI biomimicry design

This artificial dataset then aids a computer or artificial intelligence (AI) neural network in how to respond to specific criteria or situations in the absence of or as a replacement for real world data.

What are its Use Cases?

Santa Clara-based Nvidia is using synthetic data in Nvidia Constellation – its cloud-based autonomous simulation platform. David Nister, VP of Autonomous Driving Software at Nvidia explained to VentureBeat that “by removing human error from the driving equation, we can prevent the vast majority of collisions and minimize the impact of those that do occur”.

Synthetic data is implicated in achieving this. Its software follows the core principal of collision avoidance rather than a range of preset rules. Calculations are performed on-vehicle, frame by frame. Both real world and synthetic data has been used to validate the data used within the collision avoidance system which has been based on high risk highway and urban driving scenarios.

The vast majority of car crashes are caused by human error. However, whilst issues to date with autonomous driving systems have been few and far between, they have been highly publicized. For that reason, the work that Nvidia is doing is important in reducing what is already a lower risk further still. Synthetic data is playing a significant role in that process. Additionally, it’s speeding up the modelling process.

Raman Mehta, CIO at automotive company, Visteon, suggests that not a lot of labelled data is required whilst at the same time, the learning curve of models is speeded up when using synthetic data.

Synthetic Data Market

Companies such as Facebook have made billions off the back of data. That market is likely to undergo considerable change in the years ahead with people being empowered to take ownership of their own data. However, there is also a market for synthetic data.

Read More: Are you really buying Facebook’s privacy-focused vision? Op-ed

So long as companies embrace regulatory compliance related to synthetic data, then there is every reason to believe that it will be a valuable commodity for use on a commercial basis by enterprises.

In fact, it’s in consideration of the rise in the assertion of data privacy that synthetic data may become of more importance and relevance. In the UK, synthetic data is being used for the first time by Health Data Insights in the health sector.

Marketed as Simulacrum, the company partnered with British pharmaceutical giant AstraZeneca and the U.S. health information technology company, IQVIA, to produce a data pool of synthetic cancer data.

Read More: US intelligence researching DNA for exabytes of data storage

This artificial data is modeled on actual patient data collected by the National Cancer Registration and Analysis Service (NCRAS), a part of the UK’s National Health Service (NHS).

On a pharmaphorum webinar last November, Jem Rashbass, Medical Director at Health Data Insight, explained the company’s product offering as it pertains to synthetic data:

“We set out to create a dataset that had the look and feel and the same structure as the data held by Public Health England but was in fact entirely synthetic – and that’s what the Simulacrum is.”

Prediction models that rely on AI are also leaning on synthetic data to train the AI. A team working under Juliet Biggs, a volcanologist at Bristol University in England, is using AI to produce a model that can predict volcanic eruptions. It’s training its neural network on data generated synthetically from simulated eruptions.

Data Democracy but not Without its Pitfalls

As highlighted above, use cases are emerging for the technology. It’s democratizing in that it allows tech startups to compete with tech giants such as Google and Facebook who have had ongoing access to the largest of datasets. Using synthetic data also means cost savings by comparison with the collection of real world data.

Read More: Facebook disguised sponsored ads as regular posts, Adblock Plus fixed that

With the integrity of personal data usage now being a contentious subject, such data can be anonymized – stripped of all personal information – and then used as synthetic data. This is likely to become a solution for many companies and research organizations as they seek to progress their projects whilst adhering to increasing standards and regulations in the area of personal data.

However, no new technology is without its limitations. Whilst artificial data may mimic the properties of corresponding real world data, it’s not a direct copy. Therefore, the subtleties of the dataset are not likely to be the same. This may hamper the capability of synthetic data in system training situations, negatively impacting on its output accuracy.

Added to that, the quality of synthetic data is largely dependent upon the efficacy of the model that was used to produce it. Another step is also required with the use of a verification server as an intermediate step to interrogate the initial data.

Synthetic data will clearly have a part to play in research and development, modelling and the training of AI neural networks. It is an excellent source of cheap data. However, it is not a replacement for human interpreted real world data. Both will still be relevant as machine learning and the machine to machine (M2M) economy develop.