Congress hears testimony on who should identify and address disinformation, with one witness suggesting that the people decide while others say the government should either work with big tech or act against it.

Witnesses in Wednesday’s congressional hearing “A Growing Threat: How Disinformation Damages American Democracy” unanimously agreed that there should be no federal disinformation governance board.

The five witnesses also agreed that news should not be pre-screened for truth by any government entity before being broadcast.

But when it came to actual recommendations regarding what should be done about disinformation, particularly regarding elections, their answers varied.

“Congress shall make no law respecting an establishment of religion, or prohibiting the free exercise thereof; or abridging the freedom of speech, or of the press; or the right of the people peaceably to assemble, and to petition the Government for a redress of grievances” — First Amendment to the Constitution of the United States of America

To combat disinformation, four of the witnesses, each speaking separately, made the following recommendations between them:

- That citizens should rely on elected officials and other "truthtellers" to tell them the truth

- That the federal government should rely on its existing authorities regarding harmful practices like threats to election officials

- That the federal government should work in tandem with social media companies

- That Congress should take legislative action against big tech business practices

- That more money and resources should be directed to protecting election officials

- That researchers should have more access to social media data

One witness, Institute for Free Speech Senior Fellow Gary Lawkowski, argued that more good speech beats bad speech and that citizens should decide for themselves what is and isn’t disinformation.

“I think when presented with all the information, they [the American people] can choose for themselves, and I think that’s the way it’s supposed to work in a democratic society” — Gary Lawkowski

For the free speech advocate, “the fundamental problem with regulating disinformation is ‘who’ decides.”

“I believe the American people should make that decision,” Lawkowski testified, adding, “I think when presented with all the information, they can choose for themselves, and I think that’s the way it’s supposed to work in a democratic society.”

“The remedy for bad speech is good speech because sometimes the conventional wisdom is wrong, and sometimes the unconventional wisdom is right,” he added later.

“Positioning government as an arbiter of truth […] is far more dangerous to the long-term health of American democracy” — Gary Lawkowski

“More speech,” according to Lawkowski’s written testimony, “allows true speech to outshine false statements in a marketplace of ideas.”

“As distasteful as that may be at times, the alternative, positioning government as an arbiter of truth, is far more dangerous to the long-term health of American democracy.”

Among those dangers is “the risk that government employees will simply declare inconvenient, embarrassing, or incriminating information to be ‘disinformation’ and seek to strangle valid criticisms in the crib.”

“The government is supposed to be the servant of the people; it’s not supposed to be its master. It’s supposed to take its direction from ‘we, the people’ — not direct ‘we, the people,’” he added.

“Social media companies are subject to the same temptations and fallibilities as anyone else. They should not be in the business of determining what’s true and what’s false” — Gary Lawkowski

When asked if social media companies should determine what is or isn’t disinformation, Lawkowski replied:

“Social media companies are subject to the same temptations and fallibilities as anyone else. They should not be in the business of determining what’s true and what’s false.”

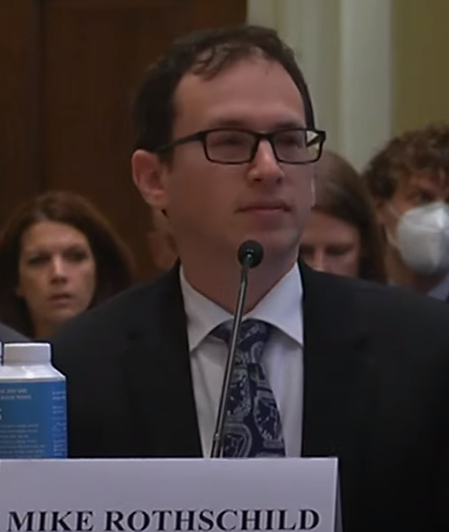

“I think that there should be synergy between social media companies and between the government” — Mike Rothschild

In his opening statement, Mike Rothschild, a journalist and the author of “The World’s Worst Conspiracy Theories,” said that disinformation was not just a viral tweet but a “grave danger to our democratic process.”

“If government, private industry, and the American electorate don’t agree to address the danger it [disinformation] represents, it could send America down a dark path that will be very difficult to come back from,” Rothschild testified.

While not in favor of a federal disinformation governance board, the author recommended that “there should be synergy between social media companies and between the government,” adding, “I don’t think one should rule over the other, but I think they should work in tandem.”

“More professionals and academics who study disinformation are embracing the idea of ‘pre-bunking’ to inoculate readers against conspiracy theories before they become popular” — Mike Rothschild

In his written testimony, Rothschild considered what could be done to stop disinformation and listed several approaches that had already been tried, with mixed effectiveness, including:

- Fact checking: “Fact checking matters because without challenging conspiracy theories, they grow and spread to new audiences […] If they aren’t confronted, there’s nothing to tell people they aren’t true.”

- Pre-bunking narratives: “More professionals and academics who study disinformation are embracing the idea of ‘pre-bunking’ to inoculate readers against conspiracy theories before they become popular.”

- Social media censorship: “Social media crackdowns can also help stop the thread of hoaxes and disinformation, though many major sites have been reluctant to deplatform major conspiracy theory promoters for fear of looking biased against conservatives.”

- Relying on influencers: “Ultimately, it’s up to trusted figures in the communities targeted by disinformation to reassure people that American elections are free and fair, that mail-in voting is safe, that their vote will count, and that if their candidate loses, it’s not because of fraud or a cabal of conspirators.”

“If government, private industry, and the American electorate don’t agree to address the danger it [disinformation] represents, it could send America down a dark path that will be very difficult to come back from” — Mike Rothschild

When asked if social media companies should determine which posts are true and which are disinformation, Rothschild responded:

“I think that they can warn users on known disinformation or lies.”

“I believe that we have to rely on elected officials and others to be truthtellers” — Lisa Deeley

Lisa Deeley, chairwoman of the Philadelphia City Commissioners, recommended that young people be taught to distinguish what is true from what is false, but said that for now people should rely on elected officials and other “truthtellers.”

“I believe that we have to rely on elected officials and others to be truthtellers, so when elected officials speak, people listen, and we have a responsibility to speak truth and to fight back the disinformation,” Deeley testified.

“[Social media companies] have to get the facts from truthtellers” — Lisa Deeley

According to the elected official, social media companies should get their facts from elected officials and other truthtellers.

When asked if social media platforms should determine what is and isn’t disinformation, Deeley replied:

“I think they [social media companies] should base their answers on factual information […] They have to get the facts from truthtellers.”

In her written testimony, Deeley added, “People posting and sharing videos on Facebook and Twitter, even those who title themselves as experts, may not be the best place to gather your facts.”

“We need a comprehensive set of legislative, regulatory, and corporate accountability reforms to reduce the harmful impacts of disinformation” — Yosef Getachew

In his opening statement, Yosef Getachew, Media and Democracy Program Director at Common Cause, recommended that Congress take legislative action against social media business practices.

“We need a comprehensive set of legislative, regulatory, and corporate accountability reforms to reduce the harmful impacts of disinformation,” Getachew testified.

“Many of these reforms involve reining in social media platforms, whose business practices have incentivized the proliferation of harmful content.”

His suggested reforms included:

- Comprehensive privacy legislation

- Giving researchers more access to social media data

- Supporting local journalism

In his written testimony, Getachew added that “Congress must pass reforms that mitigate the harmful business practices of social media platforms.”

“Congress, federal agencies, and all political leaders must do more to address the harms of election disinformation and support the election workers” — Edgardo Cortes

Focusing on the safety of election workers, Edgardo Cortes, an election security adviser at the Brennan Center for Justice at NYU School of Law, said that more support, funding, and resources were needed to address internal and external threats.

“Congress, federal agencies, and all political leaders must do more to address the harms of election disinformation and support the election workers who have borne the brunt of the impact,” said Cortes in his written testimony.

“Specifically, federal leaders must emphasize accurate information about elections, provide greater protections for election workers, and direct more funding and resources to help election offices address internal and external threats.”

The hearing ran a little over an hour, less than half of which was devoted to questioning the witnesses.

According to the First Amendment to the Constitution of the United States of America, “Congress shall make no law respecting an establishment of religion, or prohibiting the free exercise thereof; or abridging the freedom of speech, or of the press; or the right of the people peaceably to assemble, and to petition the Government for a redress of grievances.”