Pessimistic Archive is a newsletter based around clippings from old newspapers where past generations express their concerns about the future and new technologies.

In 1859, French poet Charles Baudelaire described photography as*“the refuge of every would-be painter, every painter too ill-endowed or too lazy to complete his studies”* and to embrace it was a sign of “blindness” and “imbecility.”

In 1906, a writer called love letters written with a typewriter the most “cold-blooded, mechanical, unromantic production imaginable”, while another writer said that “The girl who will put up with a typewritten love letter will put up with anything.”

In the past century, attention-grabbing newspaper headlines warned against reading in bed, the evils of roller-skating, “bicycle face” which was attributed to “the nervous strain put upon the rider, in keeping his balance”, and the American Society of Composers, Authors and Publishers proved with charts how “the talking pictures, radio and the phonograph” had “murdered music.”

Fast forward to 2023, we have so-called artificial intelligence. It can seamlessly and within seconds generate text, images, videos, and music from text prompts. Many artists are concerned.

Three independent visual artists – Sarah Andersen, Kelly Mckernan, and Karla Ortiz – have gone so far as filing a class-action lawsuit against Stable Diffusion with help from lawyer Matthew Butterick and litigators from Joseph Saveri Law Firm.

Matthew Butterick is already a known figure in “the legal copyright battle against AI” from another class-action lawsuit against Github’s CoPilot, a generative AI tool for code generation.

The Class-Action Lawsuit

In my latest post, I wrote about the open-source AI image generator, Stable Diffusion. The class-action lawsuit is targeted against the owner of Stable Diffusion, Stability AI, another famous AI image company, Midjourney, and the online art community, DevianArt.

However, the plaintiff’s complaint is formulated in a broad scope and is essentially taking on every generative AI model trained on copyrighted data – which all the big ones are, and in gigantic amounts.

In effect, if the San Francisco federal court decides to hear the case on the 19th of July, in spite of the defendants’ motions to dismiss, the court’s decision could massively impact a multi-trillion-dollar industry.

Overall, the trio of artists behind the class-action lawsuit is trying to impose a “yes” to two difficult copyright questions generative AI models give rise to – one relating to inputs and one relating to outputs:

- The input question: Should developers obtain permission and/or pay a license to right holders for using their copyrighted material in the training process of an AI?

- The output question: If a generative AI product generates output that looks similar to a piece of work created by a human artist, can the right holder make infringement claims against the provider?

I am not an expert in US copyright law, just an observer with a neutral stance on the questions. Based on my research, I believe the answer to the first question is “no”, while the second question is more problematic to answer, and may depend on a case-by-case assessment.

I highly doubt that this class-action lawsuit will provide us with any answers.

Another pending copyright case concerning Stable Diffusion’s use and distribution of images was filed by the stock image behemoth Getty Images in February of this year.

The lawsuit from Getty Images in my view stands a much better chance of going to court and contributing to the legal understanding of copyrights vs. generative AI.

The main difference in one sentence: the Getty Images lawsuit is better documented. Getty Images can prove their rights and point to specific infringements of their rights, whereas the artists behind the class-action lawsuit cannot.

The artists’ class-action complaint is unfortunately riddled with rudimentary mistakes and wrong assumptions about how Stable Diffusion was trained and how the model generates images.

A group of tech enthusiasts has created a website http://www.stablediffusionfrivolous.com/ where they point to some of the technical inaccuracies in the complaint.

Here, I will focus on how the artists address, or rather fail to address, the two legal questions as stated above.

The Input Question

Here is a quote from the complaint (¶57-58), where the artists offer their views on the input question:

“Stability scraped, and thereby copied over five billion images from websites as the Training Images used as training data for Stable Diffusion.

Stability did not seek consent from either the creators of the Training Images or the websites that hosted them from which they were scraped.

Stability did not attempt to negotiate licenses for any of the Training Images. Stability simply took them. Stability has embedded and stored compressed copies of the Training Images within Stable Diffusion.”

The first version of Stable Diffusion was trained with “CLIP-filtered” image-text pairs from the public database LAION-5B.

LAION-5B contains information about 5.85 billion images and is the largest database of its kind. It was developed by the German non-profit organization LAION (an acronym for Large-scale Artificial Intelligence Open Network), and Stability AI helped to fund its development.

It’s important to notice that there are not any actual images stored in LAION-5B. Instead, information about each image is stored and consists of:

- An URL link to the image’s website

- A short text description of what the image depicts

- Height and width of the image

- The perceived similarity to other images

- a probability score of how likely it is that the image is “unsafe” (pornographic/NSFW)

- a probability score of how likely it is that the image has a watermark

The artists’ claim that Stable Diffusion “stores compressed copies” of their art is therefore a misnomer. In actuality, Stable Diffusion’s training dataset consists of metadata about some of the artists’ images, and that metadata is not in itself copyright protected.

In the same way a song on Spotify is copyright-protected, but metadata about it such as artist name, song title, producer, release date, genre, and track duration is not. That is because retrieving this data is a purely mechanical process that does not require any creative efforts.

As a public dataset, LAION-5B can be examined by anyone interested. The company Spawning has created a search tool haveibeentrained.com where people can search through LAION-5B to see if their images are included in the dataset.

This is what the three artists, Sarah Andersen, Kelly McKernan, and Karla Ortiz did, and they found respectively more than 200, more than 30, and more than 12 representations of their work.

Specifically, Stable Diffusion was initially trained with 2.3 billion images from a subset of LAION-5B called LAION-2B-EN that only contains images with text descriptions in English.

Considering the size of Stable Diffusions training data, the unwitting contributions made by the three artists are small drops in a vast ocean.

In comparison, the Getty Images lawsuit against Stability AI concerned more than 12 million photographs from their collection which is still a minuscule part of the entire dataset.

Of all the artists’ works, only 16 images have been registered with the US copyright office by Sarah Andersen.

It follows from 17 U.S.C. § 411(a), that “no civil action for infringement of the copyright in any United States work shall be instituted until preregistration or registration of the copyright claim has been made (..)”.

In other words, if a work is not registered with the US copyright office, the right holder can generally not make infringement claims in a civil lawsuit. This means that the artists can only make claims on behalf of the 16 works owned and registered by Sarah Andersen.

If only the artists could prove that Stable Diffusion can sometimes generate outputs that resemble any of these 16 images, the artists could perhaps make a case concerning “the output question.” But as we shall see, they are unable to do so.

The Output Question

In regards to the output question, the artists suggest that every output Stable Diffusion generates is essentially derived from its training data and thereby copyright infringing (see ¶94-95). This legal theory is extremely far-fetched.

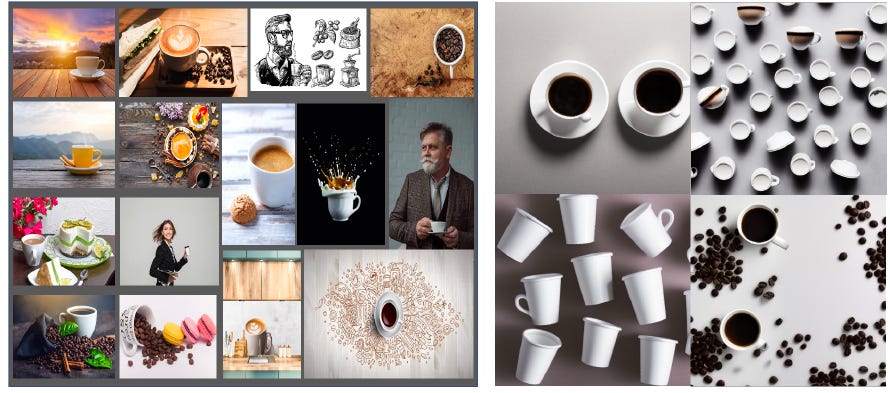

Below is an illustration from law professor Matthew Sag’s paper “Copyright Safety for Generative AI.” The 15 images to the left are from Stable Diffusions training data with the tags “white”, “coffee” and “cup.”

The images to the right were generated by Stable Diffusion with the text prompt “coffee cups on white backgrounds.” According to the artists’ logic, all of the images to the right would infringe on the copyright of the images to the left.

Although the images do clearly not look substantially similar.

Under certain rare conditions, it has been proven that Stable Diffusion in fact can generate output images that look very similar to images from its training dataset.

This is especially likely to happen when the input training image is widely distributed on the internet and reoccurs over and over again in Stable Diffusion’s training data.

In a recent paper titled Extracting Training Data from Diffusion Models, Nicholas Carlini and his co-authors identified 350.000 of the most duplicated images in Stable Diffusions training data.

Hereafter, they generated 500 new images via Stable Diffusion with text prompts identical to the text descriptions associated with each of the training data images.

As it turned out, out of the 175 million images (350.000*500), only 109 of the images (0.03%) could reasonably be considered “near-copies.”

Thereby, copyright infringements can happen, but the artists do not bring up any examples of how Stable Diffusion has copied their work. On the contrary, they write in the complaint ¶ 93:

“In general, none of the Stable Diffusion output images provided in response to a particular Text Prompt is likely to be a close match for any specific image in the training data.”

The artists do claim that Stable Diffusion is able to mimic their personal artistic styles. Normally, an “artistic style” cannot be subject to copyright protection. Infringement claims always have to be tied to infringements of specific works.

However, there is a legitimate issue here that has received a fair amount of public attention. Generative AI models can copy the distinctive styles of famous artists in seconds, indefinitely, and with close to zero costs.

To solve this problem, Stability AI removed the names of famous artists from labels in their dataset as a part of a November upgrade last year. This means that Stable Diffusion can no longer mimic people’s artistic styles.

If you, for example, ask Stable Diffusion to create an image in the style of Picasso or Rembrandt it is no longer able to do so. The change was initiated two months prior to the class-action complaint.

Overall, it is unclear how and why the artists believe Stable Diffusion copies their work. The artists seem more concerned with how Stable Diffusion might be able to threaten their jobs in the future, and less concerned with how Stable Diffusion actually works now.

One of the three artists, Sarah Andersen, wrote in a NY Times article from December last year:

“I’ve been playing around with several generators, and so far none have mimicked my style in a way that can directly threaten my career, a fact that will almost certainly change as A.I. continues to improve.”

Below are two illustrations from the article, one by Sarah Andersen and one by Stable Diffusion. You can probably guess which one was created by who.

Closing Thought

In December 2022, Stability AI announced that they had partnered up with Spawning, the company behind haveibeentrained.com, and would now provide artists with the option to either opt in or opt out of having their works used as training material for the next version of Stable Diffusion.

Although the initiative may not be perfect, it could be considered a step in the right direction for any artist who is concerned about feeding their work to large foundation models.

Prior to the class-action lawsuit, Karla Ortiz talked to MIT Technology Review about the new opt-out function, and she did not think Stability AI went far enough:

“The only thing that Stability.AI can do is algorithmic disgorgement where they completely destroy their database and they completely destroy all models that have all of our data in it”

This statement is very telling. The three artists behind the class-action law, along with Matthew Butterick and the rest of their legal representation, pretends to stand up for the rights of the artist, but they are in fact modern-day Luddites.

This article was originally published by Futuristic Lawyer on Hackernoon.