Apple’s research paper on artificial intelligence (AI) focuses heavily on facial features and hand gestures, with hints of mapping human emotions.

The highly technical paper, entitled “Learning from Simulated and Unsupervised Images through Adversarial Training,” is Apple’s first published research in the field of AI, and it focuses on human expression.

Among the paper’s authors is Apple’s Deep Learning Scientist, Josh Susskind, whose entire academic and professional career has focused on human behavior, machine learning, and facial expression recognition.

Dr. Susskind also co-founded Emotient, “the leader in emotion detection and sentiment analysis based on facial expressions,” before the company was acquired by Apple in January 2016.

Additionally, Susskind’s name appears on two US patents pertaining to expression recognition, so it is a fair bet that this is where his talents are being applied at Apple. His co-authored patents are:

- Collection of machine learning training data for expression recognition.

- Weak hypothesis generation apparatus and method, learning apparatus and method, detection apparatus and method, facial expression learning apparatus and method, facial expression recognition apparatus and method, and robot apparatus.

Before getting into some of the technicalities of Apple’s research paper, the most interesting question about Apple’s research into AI and human expression is why. What applications will this technology have and for what purposes?

Mapping human expression and emotion: What are the applications?

Tech Times speculated on how Apple could use Emotient’s technology in an article written two days after the acquisition. The publication pointed out that:

• Emotient’s technology is used to help advertisers assess viewers’ reactions to their advertisements.

• Doctors use the startup’s technology to interpret the pain of patients who cannot express themselves.

• The company said one of its clients, a retailer, used the AI technology to gauge shoppers’ reactions to products in its stores.

Right off the bat, there are three established applications: advertising, healthcare, and retail. However, this technology has far broader potential.

One can imagine using this technology to read expressions in law enforcement, for example, or even to build a database of every human expression, which could then be used to infer how any person on the planet is feeling and, to a lesser extent, what they are thinking.

Think of the TV show “Lie to Me,” but replace the main characters with AI: artificial intelligence forensics for detecting false testimony. Now there’s an interesting research topic!

The technology could also be used to mimic human expression flawlessly through AI, which has great potential for abuse, especially in combination with facial and vocal manipulation software.

By mapping human expression, Apple could unleash a technology capable of imitating or manipulating any audio or video recording of a person ever made.

Making sense of Apple’s research

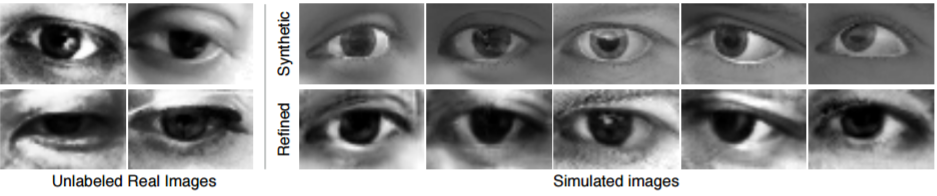

Although the paper never mentions the words “emotion” or “expression,” almost all of its images, synthetic or otherwise, depict human eyes or hand gestures.

The paper demonstrates the company’s ambition “to train models on synthetic images, potentially avoiding the need for expensive annotations.” The research proposes Simulated + Unsupervised (S+U) learning “to learn a model that improves the realism of synthetic images from a simulator using unlabeled real data.”

In other words, the researchers use “a refiner network to add realism to synthetic images,” and, according to the paper, this improved realism will enable “the training of better machine learning models on large datasets without any data collection or human annotation effort.”
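
To make the refiner idea more concrete, here is a minimal sketch of the kind of objective such a network might minimize. It is our own illustration under stated assumptions, not Apple’s code: we assume a hypothetical discriminator that outputs the probability that an image is synthetic, and an L1 “self-regularization” penalty that keeps the refined image close to the simulator’s original so its labels (for example, gaze direction) stay valid. Images are flattened lists of pixel values, and the function names and the weight `lam` are hypothetical.

```python
import math
from typing import Sequence


def adversarial_loss(p_synthetic: float) -> float:
    """Realism term: -log(1 - D(refined)).

    p_synthetic is a (hypothetical) discriminator's probability that the
    refined image is synthetic; the loss shrinks as the discriminator is fooled.
    """
    eps = 1e-12  # guard against log(0) when the discriminator is certain
    return -math.log(max(1.0 - p_synthetic, eps))


def self_regularization_loss(refined: Sequence[float], synthetic: Sequence[float]) -> float:
    """L1 distance between refined pixels and the original synthetic pixels."""
    if len(refined) != len(synthetic):
        raise ValueError("images must have the same number of pixels")
    return sum(abs(r - s) for r, s in zip(refined, synthetic))


def refiner_loss(p_synthetic: float, refined: Sequence[float],
                 synthetic: Sequence[float], lam: float = 0.1) -> float:
    """Look real to the discriminator, but stay close to the simulator output."""
    return adversarial_loss(p_synthetic) + lam * self_regularization_loss(refined, synthetic)


# Toy usage with made-up pixel values and discriminator output.
synthetic_img = [0.2, 0.5, 0.9]
refined_img = [0.25, 0.45, 0.9]
print(refiner_loss(0.5, refined_img, synthetic_img))
```

The weight `lam` trades realism against fidelity: set it too low and the refiner may alter the very features (such as where an eye is looking) that the synthetic labels describe.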

The researchers at Apple devised a “Visual Turing Test to quantitatively evaluate the visual quality of the refined images,” in which “the subjects were constantly shown 20 examples of real and refined images while performing the task. The subjects found it very hard to tell the difference between the real images and the refined images.”
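
The logic of such a test is simple: if subjects sorting images into “real” and “refined” score close to 50% accuracy, they are doing no better than guessing. The sketch below computes accuracy and an exact two-sided binomial p-value against chance. The binomial test is our own choice for judging “no better than chance,” and the counts in the usage line are made-up placeholders, not figures from Apple’s paper.

```python
from math import comb


def accuracy(correct: int, total: int) -> float:
    """Fraction of real/refined labels the subjects got right."""
    if total <= 0:
        raise ValueError("total must be positive")
    return correct / total


def binomial_two_sided_p(correct: int, total: int, p: float = 0.5) -> float:
    """Exact two-sided binomial test against a guessing rate p.

    Returns the probability, under pure guessing, of an outcome at least as
    unlikely as the observed number of correct answers.
    """
    probs = [comb(total, k) * p**k * (1 - p) ** (total - k) for k in range(total + 1)]
    observed = probs[correct]
    # Sum every outcome no more likely than the observed one; the small
    # tolerance absorbs floating-point noise between symmetric outcomes.
    return min(1.0, sum(q for q in probs if q <= observed * (1 + 1e-9)))


# Hypothetical study: 100 judgments, 52 correct.
print(f"accuracy = {accuracy(52, 100):.2f}, p = {binomial_two_sided_p(52, 100):.3f}")
```

A large p-value means the subjects’ accuracy is statistically indistinguishable from coin-flipping, which is what “very hard to tell the difference” would look like in numbers.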

Beyond Face Swapping

Last June, TechCrunch reported, “According to Apple, your phone does more than 11 billion computations per photo to recognize who and what is featured in the image.” The context was Apple’s unveiling of the updated Photos app in iOS 10.

With more than 11 billion computations per photo just to organize a photo library, one can only imagine how many computations AI could devote to analyzing human expression. Once human expression is mapped, it will be easier to imitate human emotion and, a step beyond that, consciousness, which has been the dream of AI research since its inception.

Through its research, Apple is taking steps to make machines more human, much as Microsoft has taken steps to “democratize AI,” but this opens the floodgates to potential abuse on a massive scale.

Apple was also conspicuously absent from the list of tech companies that formed the “Partnership on AI,” which included Google, DeepMind, IBM, Amazon, Microsoft, and Facebook.

Apart from acquiring startups, Apple has chosen to go it alone in AI, which distinguishes it from the other big players in the field. Declining to join the Partnership on AI could be a way for the company to differentiate itself from the rest, both in research capabilities and technology, and perhaps even politically.

Every tech company agrees that AI is the future, but each differs in its approach. What will Apple’s legacy in AI be? Will ethics be at the forefront of mapping human expression, or will it give way to money and power? For good or ill, exciting developments surely await in 2017.