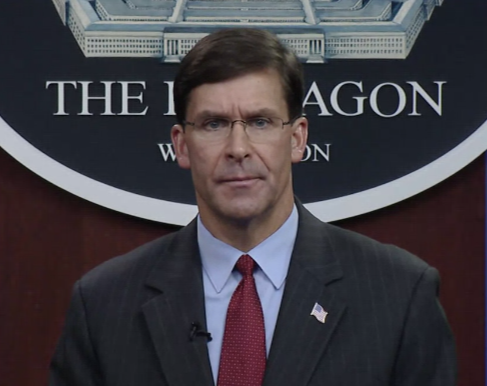

The Pentagon’s Joint Artificial Intelligence Center (JAIC) will launch the first-ever AI partnership for defense with military and defense organizations from more than 10 nations, US Secretary of Defense Mark Esper announces at the DoD AI Symposium.

Delivering the keynote at the virtual DoD AI Symposium today, Secretary of Defense Esper announced that the JAIC will be launching the AI partnership for defense next week in an effort to promote ethical AI use among allies and partners.

“Next week, the JAIC will launch the first-ever AI partnership for defense to engage military and defense organizations from more than 10 nations with a focus on incorporating ethical principles into the AI delivery pipeline,” said Esper.

“Over the coming year, we expect to expand this initiative to include even more countries as we create new frameworks and tools for data sharing, cooperative development, and strengthened interoperability,” he added.

The upcoming AI partnership for defense is a response to the growing threat posed by the Chinese Communist Party’s authoritarian use of AI, which has created a surveillance state within China’s own borders while the party seeks to export its Orwellian capabilities to autocratic governments around the world.

“The contrast between American leadership on AI and that of Beijing and Moscow couldn’t be clearer — we are pioneering a vision for emerging technology that protects the US Constitution and the sacred rights of all Americans,” Esper declared.

“Abroad, we seek to promote the adoption of AI in a manner consistent with the values we share with our allies and partners — individual liberty, democracy, human rights, and respect for the rule of law — just to name a few.”

Earlier in his keynote, the secretary of defense explained how and why China’s authoritarian use of AI was bad for the entire world.

“If there is any doubt as to how the CCP would wield AI to influence its agenda abroad, look no further than how it uses this capability at home,” he said.

“Beijing is constructing a 21st Century surveillance state designed to wield unprecedented control over its own people.”

Forced facial scanning of all citizens registering mobile phones, DNA phenotyping to profile an entire ethnic population for identification and detention, predictive policing by algorithm, forced sterilization and birth control, massive surveillance, and censorship are just some of the many abuses Communist China is perpetrating against its own people, according to media reports old and new.

Esper went on to say, “With hundreds of millions of cameras strategically located across the country and billions of data points generated by the Chinese Internet of Things, the CCP will soon be able to identify almost anyone entering a public space and censor dissent in real-time.

“More troubling is the fact that these systems can be used to invade private lives, leaving no text message, internet search, purchase, or personal activity free from Beijing’s tightening grip,” he added.

I would argue the same could be said about Google and other tech platforms in the US.

The secretary of defense also referenced how the People’s Republic of China (PRC) is using AI to repress its Muslim Uyghur population in Xinjiang, as well as quash dissent among pro-democracy protesters in Hong Kong.

“As we speak, the PRC is deploying and honing its AI surveillance apparatus to support the targeted repression of its Muslim Uyghur population,” said Esper.

“Likewise, pro-democracy protesters in Hong Kong are being identified, seized, imprisoned, or worse by the CCP’s digital police state unencumbered by privacy laws or ethical governing principles.”

But the authoritarian use of AI doesn’t stop at the Chinese border. Esper warned that China would be selling its Orwellian surveillance technology and tactics to repressive governments around the world.

“As China scales this technology, we fully expect it to sell these capabilities abroad, enabling other autocratic governments to move toward a new era of digital authoritarianism,” he said.

Esper’s words echo those of US Deputy Secretary of State Stephen Biegun, who testified last month before the Senate Committee on Foreign Relations that the Chinese Communist Party’s predatory economic practices were “enabling the rule of autocrats and kleptocrats globally,” and that China’s authoritarian regime was using its technology to repress the Muslim Uyghur population while cracking down on all dissidents.

“The PRC exports technological know-how that can help authoritarian governments track, reward, and punish citizens through a system of digital surveillance,” Biegun submitted.

“A key element of the PRC’s strategy is to provide political, technological, and economic support to those who are willing to turn a blind eye to the PRC’s lucrative deals at the expense of the citizens of developing nations, thereby enabling the rule of autocrats and kleptocrats globally,” he added.

The JAIC was launched in June 2018 to enhance the ability of DoD components to execute new AI initiatives, experiment, and learn within a common framework.

In February 2020, the Pentagon formally adopted a set of AI ethical principles. They include:

- Responsible: DoD personnel will exercise appropriate levels of judgment and care, while remaining responsible for the development, deployment, and use of AI capabilities.

- Equitable: The Department will take deliberate steps to minimize unintended bias in AI capabilities.

- Traceable: The Department’s AI capabilities will be developed and deployed such that relevant personnel possess an appropriate understanding of the technology, development processes, and operational methods applicable to AI capabilities, including with transparent and auditable methodologies, data sources, and design procedure and documentation.

- Reliable: The Department’s AI capabilities will have explicit, well-defined uses, and the safety, security, and effectiveness of such capabilities will be subject to testing and assurance within those defined uses across their entire life-cycles.

- Governable: The Department will design and engineer AI capabilities to fulfill their intended functions while possessing the ability to detect and avoid unintended consequences, and the ability to disengage or deactivate deployed systems that demonstrate unintended behavior.

According to the Pentagon, “These principles will apply to both combat and non-combat functions and assist the US military in upholding legal, ethical and policy commitments in the field of AI.”
